[Editor’s note: Dr. Gorski is on vacation this week (for the first time since the pandemic started, he’s actually heading to the lake for a few days). Fortunately, we have a guest post by William F. Paolo, MD, associate professor of emergency medicine and public health and preventative medicine at SUNY Upstate, which continues our “Ivermectin is the new hydroxychloroquine” series. This time, Dr. Paolo directly addresses the misinformation about ivermectin promoted by evolutionary biologist and podcaster Bret Weinstein, in particular his misrepresentation and misunderstanding of meta-analyses.]

The ongoing COVID-19 pandemic has produced a large group of experts from fields outside medicine and public health who nonetheless have the surprising confidence to publicly express definitive opinions on topics clearly outside their expertise. The most striking example has come from the evolutionary biologist Dr. Bret Weinstein, who has turned a public profile built on cultural issues into one centered on the assessment of science. Most toxically, this has manifested in sweeping, non-empirical claims about the role of ivermectin in the treatment and prophylaxis of COVID-19, the disease caused by SARS-CoV-2.

What Bret Weinstein claims

The claims Dr. Weinstein has made about the efficacy of ivermectin against SARS-CoV-2 would, if true, make it one of the most astonishingly effective xenobiotics in the history of modern medicine. The claims vary and are mostly cunningly phrased as exploratory questions, but the following have either been stated by Dr. Weinstein or endorsed by guests on his podcast. Ivermectin “shows itself to be about 86 percent effective at preventing contraction of Covid”. Ivermectin could be a “safe and effective medicine that prevented 85% of COVID cases and did not appear to select for different strains”.

As these assertions and “questions” have made their way onto multiple podcasts and been picked up by the mainstream press, the alleged “suppression” of this information has also become a popular theory, one that posits a deliberate and diffuse campaign to suppress this knowledge. Finally, as ever, one cannot help but note that the assertions about the wonders of ivermectin have arrived alongside numerous unfounded assertions about the mRNA vaccines, based on anecdote or discredited theories. The evidence for the efficacy and safety of the mRNA vaccines is astounding, whereas the evidence that ivermectin may be of benefit is scant and teeming with methodological and study design flaws. One can’t help but wonder how an individual with any training in scientific reasoning can look so credulously at the small, poorly designed ivermectin studies and find a miracle drug, while simultaneously dismissing the large, well-designed trials and ongoing safety monitoring that have given us the success of the mRNA COVID vaccines.

To assess the reliability of these claims, it is imperative to critically appraise the same literature Dr. Weinstein is citing. Our knowledge rests on multiple small studies, which have been compiled into two recent meta-analyses, Bryant et al. and Hill et al. These are all of low quality, and one of the meta-analyses, Hill et al., has been judged by some experts to be of “critically low quality”. It is hard not to note how closely this resembles what advocates did over a year ago when arguing for the efficacy of hydroxychloroquine, only taken to a much greater extreme. As you will see, on this score too, ivermectin is the new hydroxychloroquine.

Misunderstanding meta-analyses

One of the more bizarre claims made by Dr. Weinstein is that “…large RCTs amplify systematic error in addition to signal, whereas meta-analysis amplifies signal, and corrects for error”. To be as generous as possible, I presume this is a pithy way of making the following assertion:

Randomized controlled trials (RCTs), whether large or small, are single experiments and, though they are understood to be the experimental gold standard for determining causation, are only as good as their methodology. If a large RCT has errors in its design, all of its results are fruit of the poisonous tree, a methodological house of cards that can make erroneous results seem more or less likely than they really are. Meta-analyses, on the other hand, combine multiple smaller RCTs into a single pooled estimate of effect and so mitigate the errors of any individual RCT by merging the world’s available literature into one result. In this way, combining multiple studies overcomes the biasing effects of small or poorly done studies, and the true “signal” (the actual real-world result) becomes more apparent in the pooled result.

Though this sounds intuitively true, it reflects no understanding of how meta-analyses are assessed and critically appraised, or of the philosophical underpinnings of their role within the hierarchy of evidence.

Limitations and role of meta-analyses

A meta-analysis is a subcomponent of a systematic review in which it is held that multiple studies addressing a single topic can be combined into a composite point estimate (and confidence bounds) to determine the pooled results of multiple smaller studies. Methodologically the review treats individual experimental studies as its participants, defining inclusion and exclusion criteria based upon the question under investigation and the ability of the discovered studies to bring the question to a parsimonious resolution. After review, the studies are analyzed for bias and methodological soundness, weighted, and analyzed separately and/or together to develop a result that represents the newly derived estimate of effect based upon the combined included studies.
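To make the weighting-and-pooling step concrete, here is a minimal Python sketch of one common approach, inverse-variance (fixed-effect) pooling of log risk ratios. The study names and counts are hypothetical, and real meta-analyses (including the ones discussed here) may use different effect measures, weighting schemes, or software.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """A hypothetical two-arm trial summarized by event counts per arm."""
    name: str
    events_treated: int
    n_treated: int
    events_control: int
    n_control: int

    def log_risk_ratio(self) -> float:
        risk_treated = self.events_treated / self.n_treated
        risk_control = self.events_control / self.n_control
        return math.log(risk_treated / risk_control)

    def variance(self) -> float:
        # Large-sample variance of a log risk ratio; zero-event arms would
        # need a continuity correction, which this sketch does not apply.
        return (1 / self.events_treated - 1 / self.n_treated
                + 1 / self.events_control - 1 / self.n_control)


def fixed_effect_pool(studies):
    """Inverse-variance (fixed-effect) pooled risk ratio with a 95% CI."""
    # Larger, more precise studies get more weight.
    weights = [1 / s.variance() for s in studies]
    estimates = [s.log_risk_ratio() for s in studies]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    se = math.sqrt(1 / total_weight)
    return math.exp(pooled), math.exp(pooled - 1.96 * se), math.exp(pooled + 1.96 * se)


studies = [
    Study("Hypothetical A", events_treated=10, n_treated=100, events_control=20, n_control=100),
    Study("Hypothetical B", events_treated=30, n_treated=400, events_control=45, n_control=400),
]
rr, lower, upper = fixed_effect_pool(studies)
print(f"Pooled RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Note that nothing in this arithmetic looks at how a study was conducted: the weights depend only on counts and sample sizes, so a large but badly biased study is simply given more weight.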

The soundness of a systematic review is determined by its internal methodology and its assessment of the included studies, and is not intrinsic to the included studies themselves. However, the pooled results of the review are in fact predicated on the validity and reliability of each included study and cannot be analyzed independently of the cogency of the studies underpinning its new result. In other words, despite Dr. Weinstein’s assertion above, one decidedly cannot compensate for studies with poor methodology by combining them with more poor studies. The maxim long repeated by computer programmers applies to meta-analyses just as it does to a computer program: “Garbage In, Garbage Out” (often abbreviated GIGO). Or, as SBM editor Dr. Gorski likes to put it on Twitter:

This us almost exactly wrong. Meta-analyses are like computer programs: Garbage in, garbage out. You can't turn a bunch of turds into gold through meta-analysis, which is what #ivermectin advocates have tried to do. https://t.co/lbsVy76MrR

— David Gorski, MD, PhD (@gorskon) July 16, 2021

Which he elaborated on here:

Sure. They seem to think that smoothing out randomness in studies that don't have a high degree of bias is the same thing as eliminating bias. Again, garbage in, garbage out. Meta-analysis can't magically turn a bunch of turds into a gold ingot.

— David Gorski, MD, PhD (@gorskon) July 29, 2021

Systematic reviews, like any other scientific endeavor, can be done well or poorly, and they are not automatically high-level evidence merely by virtue of their type. They do sit at the apex of the EBM pyramid in the hierarchy of evidence, because science relies on experimental reproducibility, and a finding replicated across multiple studies is much more powerful than a single RCT done once. That placement, however, does not make any given systematic review or meta-analysis high-level evidence in and of itself, independent of its component studies or the assessment of experts.

That data can be combined into a single result is obvious; whether it is scientifically sound or reliable to do so is the question posed when critically appraising a completed review. That said, it is simply not true that the plural of garbage is evidence, to put it less sarcastically than Dr. Gorski. Each meta-analysis is only as good as the studies that underpin it and cannot rise above its components. Combining multiple bad studies does not amplify signal, and even if it appears to, there is no reason to trust the reliability of that signal, given the unreliability of the studies that produced it. Garbage in still equals garbage out.
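To illustrate Dr. Gorski’s point that smoothing out randomness is not the same as eliminating bias, here is a small Python simulation. Everything in it is hypothetical: the true effect is set to zero (no benefit), every study shares the same systematic bias of -0.5 on the log risk ratio scale (standing in for a flaw such as unblinded outcome assessment), and each study has a standard error of 0.4. It illustrates the statistical principle and is not a model of the actual ivermectin trials.

```python
import math
import random


def pool(estimates, standard_errors):
    """Inverse-variance fixed-effect pool of log-scale estimates.

    Returns (pooled estimate, lower 95% bound, upper 95% bound)."""
    weights = [1 / se**2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return pooled, pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se


def simulate_biased_studies(k, true_effect=0.0, shared_bias=-0.5, se=0.4, seed=1):
    """Hypothetical scenario: k small studies sharing one systematic bias.

    Each observed log risk ratio = true effect + shared bias + sampling noise.
    Pooling averages away the noise, but the shared bias survives intact."""
    rng = random.Random(seed)
    estimates = [true_effect + shared_bias + rng.gauss(0, se) for _ in range(k)]
    return pool(estimates, [se] * k)


for k in (5, 50):
    est, lo, hi = simulate_biased_studies(k)
    print(f"{k:>2} studies: pooled log RR {est:+.2f}, 95% CI {lo:+.2f} to {hi:+.2f}")
```

As the number of studies grows, the confidence interval narrows and centers ever more tightly on the biased value of -0.5 rather than on the true value of zero. More garbage yields a more precise, and more confidently wrong, answer.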

Limitations of included studies

The multiple studies that make up the ivermectin literature are not of high enough quality to support definitive recommendations. Each component study has numerous methodological flaws, and those flaws do not cancel out simply because the studies are aggregated. Not analyzed here are the in vitro studies that support the biological plausibility of a role for ivermectin in mitigating SARS-CoV-2 infection. Though mechanistic and in vitro studies are helpful, they are far from definitive with respect to relevant patient-oriented outcomes (reductions in infections, morbidity, and mortality), and many plausible therapeutics fail to demonstrate efficacy when tested in human subjects.

For prophylaxis, the world’s literature consists of numerous low-quality studies whose net result suggests a possible, but not definitive, benefit of ivermectin. However, these trials all suffer from numerous methodological flaws. Examples include an observational study based upon potential administration of ivermectin that did not control for dose, testing, other therapeutic agents, demographics, or epidemiological data; a case-control study that did not control for dosage, usage, or other concomitant prophylactic agents; and the largest contributor to the positive body of evidence, which was retracted for plagiarism and ethical violations. It is simply false to assert that the combination of these poor studies is greater than, and independent of, its component parts. It may be true that ivermectin is a safe and effective prophylactic, but the accumulation of low-quality studies does not make that assertion any more likely to be true than its negation. To use these studies as the foundation for asserting that it is true, or even likely, that ivermectin reduces the risk of contracting SARS-CoV-2 by 86% is spurious and is not in keeping with a rationally critical assessment of the body of evidence.

The studies of ivermectin’s ability to prevent death from SARS-CoV-2 infection suffer from similar limitations. They include a study that used a non-blinded convenience sample without reliable randomization; a study in which ivermectin patients also received higher doses of steroids, which undermined its ability to isolate the effects of ivermectin; a prospective trial of 24 patients with no patient-oriented outcomes; and, importantly, a well-designed randomized controlled trial that did not find ivermectin to be efficacious. Unsurprisingly, as with prophylaxis, the evidence does not support a definitive positive pronouncement on the effects of ivermectin and certainly does not reach the level needed to state confidently what effects (if any) it has, let alone to assert 99% efficacy. Finally, the claim that ivermectin does not induce resistance remains a theoretical assertion; at this time, there is no evidence in the medical literature to suggest that it is true.

A sound scientific mind would assess the evidence, critically appraise the studies involved, and conclude that there is an interesting hypothesis to be explored, but that it is impossible to make definitive statements about the efficacy of ivermectin as prophylaxis or treatment until larger, better studies are done. Oddly, this is exactly the conclusion that has been reached, without any need to posit a non-parsimonious theory of distributed suppression.

Statistical heterogeneity

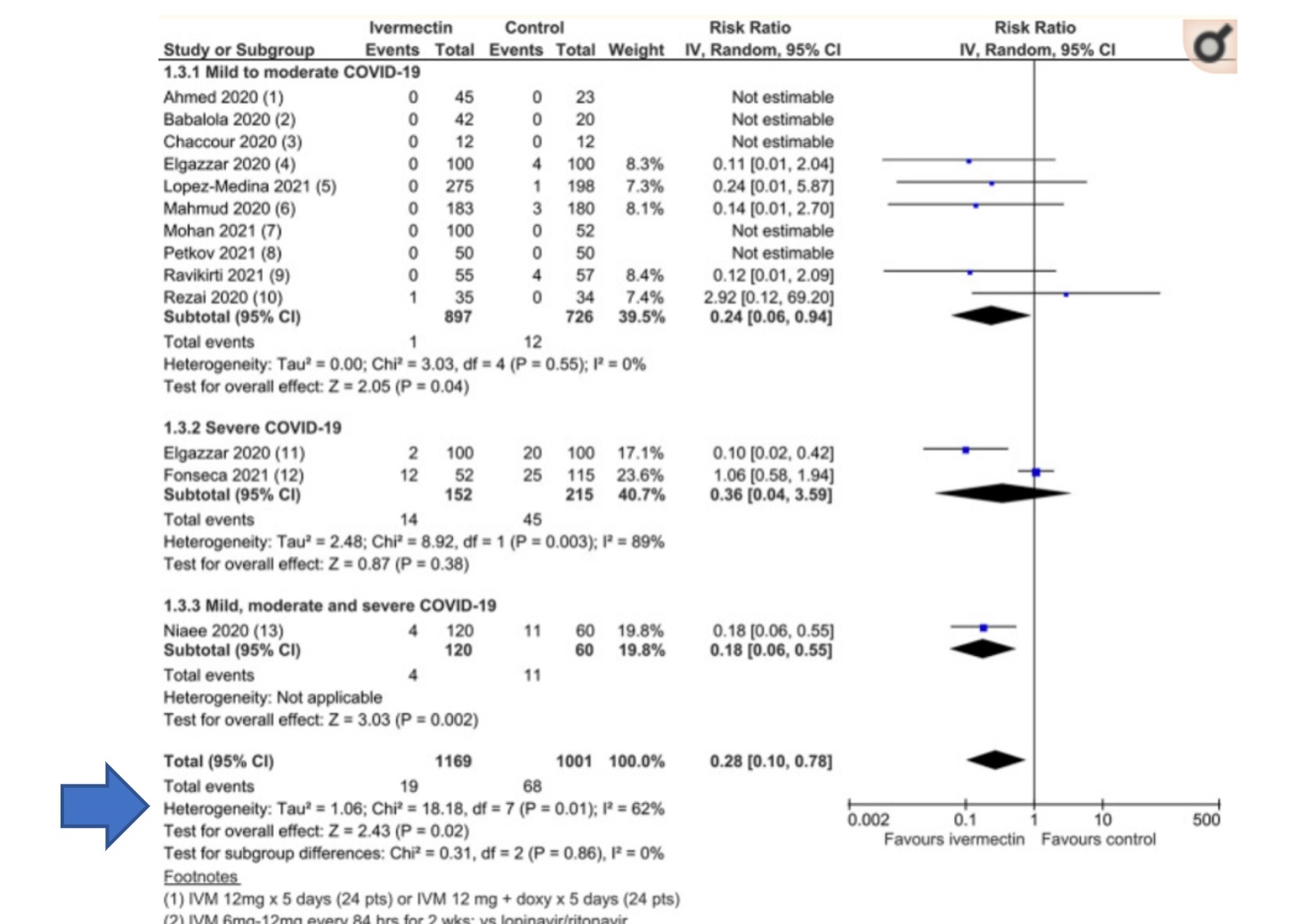

This is a forest plot, a graphical display of the estimated results of each study in a meta-analysis along with the overall result, taken from the recent meta-analysis by Bryant et al. This meta-analysis was widely touted by ivermectin advocates, including Bret Weinstein, despite its rather glaring flaws (discussed previously on SBM, along with the conspiracy theories promoted by some of its authors, in particular Tess Lawrie).

Another component not discussed by Dr. Weinstein when giving full, uncritical scientific credence to the ivermectin literature, and one that undermines his point that meta-analysis is the de facto gold standard of information, is statistical heterogeneity. It has also not been discussed in previous SBM posts on this meta-analysis, which makes this a good time to bring it up. When analyzing the results of a meta-analysis, it is imperative to examine the degree of statistical heterogeneity to determine whether the point estimates of the compiled studies are, in fact, similar enough to be combined into a single, parsimonious estimate of effect. Theoretically, multiple smaller studies can be pooled and analyzed as a single body of evidence if the component studies are of analogous design and methodology (allocation concealment, randomization, intention-to-treat analysis, etc.), and should not be pooled if they are not. To comfortably combine studies into a unitary body of evidence, they must be comparable in design, inclusion and exclusion criteria, demographics, intervention dose, outcomes, and a myriad of other factors. It follows that, if the included studies truly are of a kind, their experimentally derived results, obtained under similar conditions, should roughly approximate one another. In other words, all things being equal, the same experiment done in multiple places by different people should find roughly the same results if the studies are in fact similar enough to be analyzed as one.

The measure of heterogeneity, that is, of how much the experimental results differ from one another, is indicated by the arrow in the forest plot above. There are several ways to measure the heterogeneity of data, but the most accessible and understandable is the I² statistic, which estimates the percentage of the variability across study results that is due to heterogeneity rather than chance. Higher values represent more heterogeneity, and lower values a more homogeneous data set. Opinions vary on the optimal thresholds for categorizing the impact of statistical heterogeneity, but, in general, the following rules of thumb apply when judging its impact on the meta-analysis in question:

- 0% to 40%: might not be important

- 30% to 60%: may represent moderate heterogeneity

- 50% to 90%: may represent substantial heterogeneity

- 75% to 100%: considerable heterogeneity
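For readers who want to see where the number comes from, I² is conventionally computed from Cochran’s Q, the weighted sum of squared deviations of each study’s estimate from the pooled estimate: I² = (Q - df) / Q, where df is the number of studies minus one, truncated at zero. Below is a minimal Python sketch; the two studies in the example are hypothetical and are not taken from Bryant et al. The bands listed above overlap because they are rules of thumb, so the sketch reports the number rather than forcing a label.

```python
def cochran_q_and_i_squared(estimates, standard_errors):
    """Return (Cochran's Q, I² as a percentage) for study-level estimates
    on a log scale, using inverse-variance weights."""
    if len(estimates) < 2:
        raise ValueError("need at least two studies")
    weights = [1 / se**2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    # Q: weighted squared distance of each study from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    # I² = (Q - df) / Q, truncated at zero when Q is no larger than chance predicts.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i_squared


# Two hypothetical studies that disagree sharply (log RR 0 vs 2, SE 0.5 each).
q, i2 = cochran_q_and_i_squared([0.0, 2.0], [0.5, 0.5])
print(f"Q = {q:.1f}, I² = {i2:.1f}%")  # Q = 8.0, I² = 87.5%
```

If the studies agree closely, Q falls to around its degrees of freedom or below and I² drops to zero, which is what "the same experiment done in multiple places" should look like.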

The I² calculated for this dataset is 62%, indicating a substantial risk that the pooled result shown in the “total” row derives from multiple studies that did not find the same thing, and therefore is not easily reducible to a single, reliable summary estimate of effect. This is unsurprising given the variable methodologies and definitions of the included studies. It is possible to combine these studies and produce a single result; however, combining multiple studies does not, by itself, invariably yield reliable data.
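When heterogeneity is substantial, one common statistical response is a random-effects model, which treats the studies as estimating different but related effects. Here is a minimal Python sketch of the DerSimonian-Laird method; the inputs reuse hypothetical numbers and are not the Bryant et al. data, and this is not a claim about which model any particular ivermectin meta-analysis used.

```python
import math


def dersimonian_laird(estimates, standard_errors):
    """Random-effects pooling with the DerSimonian-Laird estimate of the
    between-study variance tau². Returns (pooled, standard error, tau²)."""
    if len(estimates) < 2:
        raise ValueError("need at least two studies")
    variances = [se**2 for se in standard_errors]
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, estimates)) / sum_w
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, estimates))
    df = len(estimates) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Random-effects weights add the between-study variance to every study.
    w_re = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * y for wi, y in zip(w_re, estimates)) / sum(w_re)
    return pooled, math.sqrt(1 / sum(w_re)), tau2


est, se_re, tau2 = dersimonian_laird([0.0, 2.0], [0.5, 0.5])
se_fixed = math.sqrt(1 / (1 / 0.5**2 + 1 / 0.5**2))
print(f"tau² = {tau2:.2f}; SE fixed {se_fixed:.2f} vs random {se_re:.2f}")
# tau² = 1.75; SE fixed 0.35 vs random 1.00
```

The wider random-effects interval is more honest about the disagreement, but it does not make dissimilar studies comparable: it still averages over whatever differences in dosing, definitions, and design produced the spread in the first place.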

By way of example, it would be possible to combine multiple studies of aspirin for the prevention of death from myocardial infarction into a single result and claim new knowledge under the guise of empiricism and the patina of science. However, if those studies used variable definitions of myocardial infarction, different means of follow-up, differing measures of the outcomes of interest, and variable aspirin dosing regimens, the single resulting data point is no more a reflection of aspirin’s actual real-world efficacy than were the individual studies on their own. Combining studies that are not alike does not bring us closer to answering the question we are asking, because amalgamating them does not, in and of itself, remove the requirement that investigational data be consistent with one another, which depends on similar experimental parameters. Such is the case with both ivermectin meta-analyses, even in their initial form with the subsequently retracted study intact, owing to the variability of the small studies they included.

Conclusions

There is simply not enough evidence, at this point, to support the efficacy of ivermectin as a novel therapeutic for SARS-CoV-2 infection, and certainly zero evidence for the striking claims made by Dr. Weinstein. The low-quality studies that make up the scientific literature are consistent with the hypothesis that ivermectin is associated with a reduction in mortality and/or transmission, but the negation of this hypothesis, on a Bayesian analysis of the in vitro data and the current total body of evidence, is even more likely. A plain reading of the evidence suggests that, as with many therapeutics before it, there is not enough evidence in either direction, and that well-done randomized controlled trials are needed. Many other promising therapeutics have followed the same arc: early promise in small studies, followed by confirmation or rejection in larger, well-designed trials. What distinguishes ivermectin is its attachment to an individual and to a movement amplified by conspiratorial reasoning and anti-vaccine sentiment.

A scientific mind, at its best, modifies hypotheses in light of empirical evidence derived from experimentation or observation and attempts to fit hypotheses to the data. Unscientific reasoning begins with an assumed conclusion and manipulates existing and future data to fit that foregone conclusion. This sort of thinking is known as backwards reasoning and, in its most extreme form, produces a cognitive bias known as anchoring, in which an individual uses a particular “anchored” conclusion to interpret all subsequent data. There seems to be little in the critical appraisal of the evidence, or in the subsequent retraction of the largest positive study, to alter Dr. Weinstein’s foundational assumption that ivermectin is an effective therapeutic for SARS-CoV-2 infection, demonstrating his unscientific approach to the assessment of evidence.

What is striking in the case of Dr. Weinstein is how his reasoning shifts depending on whether the subject is ivermectin or the mRNA COVID vaccines. Large, well-conducted mRNA vaccine trials are dismissed out of hand, whereas anecdotes, wild speculation, and false claims are elevated to the level of meaningful data. The role of systematic reviews and meta-analyses is overstated and misunderstood, whereas false claims by misleading “experts” are passed off as evidence, contradicting his own earlier assertions about evidentiary hierarchies. Taken as a whole, it appears that, when it comes to ivermectin, Dr. Weinstein either doesn’t know, doesn’t care, or doesn’t understand what the evidence shows; I’m not certain which is the preferred option.

Ivermectin advocates (many of whom, albeit not all, advocated hydroxychloroquine to treat COVID-19 this time last year) have apparently learned from the experience of seeing one “miracle drug” for COVID-19 fail as more randomized clinical trials showed no evidence of efficacy. Although it is possible (albeit unlikely) that well-designed randomized clinical trials will show that ivermectin has efficacy against COVID-19, the very same thing appears to be happening now, over a year later, with ivermectin. Meanwhile, ivermectin advocates like Bret Weinstein are still trying to portray it as a wonder drug based on a badly flawed understanding of what meta-analysis can and cannot do.