The heart of Federico Infascelli’s questionable claims.

Retraction Watch is a great website. As the name implies, it focuses on a key aspect of quality control in science: the retraction of scientific papers that have already passed peer review and been published, once serious concerns about those papers come to light.

Retracting published papers is similar to phase IV clinical trials – tracking side effects of drugs that have already been approved and are on the market so regulatory agencies can monitor for post-marketing concerns and recall the drug if necessary.

Infascelli’s woes

Recently the journal animal retracted a paper by Italian researcher Federico Infascelli. Here is their announcement:

From late September 2015, we received several expressions of concern from third parties that the electrophoresis gels presented might have been subject to unwarranted digital manipulations (added and hidden bands or zones, including in the control samples and the DNA ladder). A detailed independent investigation was carried out by animal in accordance with the Committee on Publication Ethics (COPE) guidelines. This investigation included an analysis of the claims using the figures as submitted, and reassessment of the article by one of the original peer-reviewers in light of the results of the analysis. The authors were notified of our concerns and asked to account for the observed irregularities. In the absence of a satisfactory explanation, the institution was asked to investigate further. The University of Naples concluded that multiple heterogeneities were likely attributable to digital manipulation, raising serious doubts on the reliability of the findings.

Based on the results of all investigations, we have decided to retract the article.

It looks to me like the authors of the retraction statement are trying very hard not to use the words, “scientific fraud.” The “observed irregularities” essentially were due to alleged digital manipulation of images of electrophoresis gels. Retraction Watch recounts a Nature report which stated:

…sections of images of electrophoresis gels appeared to have been obliterated, and some of the images in different papers appeared to be identical but with captions describing different experiments.

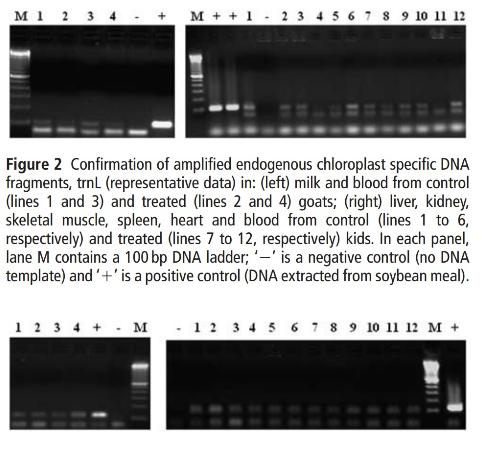

The included picture is taken from the now-retracted article, showing images of electrophoresis gels. Let’s be clear – these images are the data. Those bands represent identified pieces of DNA. When you add or delete bands from the images, you are manipulating the data. Data manipulation is scientific fraud.

This is the second paper by Infascelli retracted due to concerns over data manipulation (the first was retracted in January). A third is under investigation but has not yet been retracted. All of these papers were used to raise concerns about the safety of genetically modified crops and have been used for anti-GMO lobbying.

Given this revelation, I don’t see how any research by Infascelli or his team can ever be trusted. He now joins Seralini as a researcher who publishes dubious articles which seem motivated by an anti-GMO ideology. In Seralini’s case he was not accused of fraud, just egregiously poor scientific rigor.

A deeper problem

This one paper is not an isolated event. Nor is the problem unique to Infascelli, or even anti-GMO papers. There are two aspects to this phenomenon I want to consider – scientific fraud in general, and the emergence of an ideologically-motivated scientific fringe or subculture.

Scientific fraud is increasingly being recognized as a serious problem. I don’t want to overstate the issue – most scientific studies are perfectly legitimate, and the system tends to work itself out over the long haul. Fraud is the exception within the scientific literature, but it happens often enough to be a serious problem.

Estimating the prevalence of fraud (or more generally, “misconduct”) is difficult, because first you have to agree on an operational definition. Then there is the problem of finding and reporting all cases of misconduct. A recent paper estimates that there have been hundreds of recent cases of exposed misconduct in the US, and about 50 cases per year in the UK alone.

An anonymous survey sent to researchers found that 2% admitted to outright fabrication of data. However, 33% admitted to some dodgy research practices. These practices include throwing out data that contradicts their hypothesis, deliberately fudging the design of studies in order to get a desired result, and altering a study design at the request of the sponsor.

Fudging the study design has a powerful effect on the outcome. Simmons et al. showed that such design manipulation can generate a false positive result at the 0.05 significance level from dead-negative data 60% of the time.
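To get a feel for how this kind of inflation happens, here is a minimal simulation sketch. It is not the Simmons et al. analysis. It is my own simplified illustration, and every specific in it is an assumption: two groups of 20 with no real difference between them, two outcome measures correlated at 0.5, a z-test with known variance instead of the t-tests used in real studies, and only two kinds of flexibility (picking whichever outcome "works," and adding 10 more subjects per group if nothing is significant yet). Because it uses fewer tricks than the paper did, the rate it prints should fall well short of 60%, but it should still sit clearly above the nominal 5%.

```python
import random
from statistics import NormalDist, fmean

ALPHA = 0.05
STD_NORMAL = NormalDist()


def p_value(group_a, group_b, sd):
    """Two-sided z-test p-value for equal-sized groups with known sd."""
    n = len(group_a)
    z = (fmean(group_a) - fmean(group_b)) / (sd * (2 / n) ** 0.5)
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def draw_subject(rng, r):
    """One subject: two outcome measures correlated at r, with no true effect."""
    dv1 = rng.gauss(0, 1)
    dv2 = r * dv1 + (1 - r ** 2) ** 0.5 * rng.gauss(0, 1)
    return dv1, dv2


def any_significant(a, b, r):
    """Try outcome 1, outcome 2, and their average; report if any 'works'."""
    sd_avg = ((1 + r) / 2) ** 0.5  # sd of the average of two unit-variance DVs
    p_values = [
        p_value([s[0] for s in a], [s[0] for s in b], 1.0),
        p_value([s[1] for s in a], [s[1] for s in b], 1.0),
        p_value([(s[0] + s[1]) / 2 for s in a],
                [(s[0] + s[1]) / 2 for s in b], sd_avg),
    ]
    return min(p_values) < ALPHA


def simulate(n_sims=4000, n=20, extra=10, r=0.5, seed=1):
    """Return (honest, flexible) false positive rates on null data."""
    rng = random.Random(seed)
    honest = flexible = 0
    for _ in range(n_sims):
        a = [draw_subject(rng, r) for _ in range(n)]
        b = [draw_subject(rng, r) for _ in range(n)]
        # Fixed plan: one outcome, one sample size, one test.
        if p_value([s[0] for s in a], [s[0] for s in b], 1.0) < ALPHA:
            honest += 1
        # Flexible plan: try every outcome, then collect more data and retry.
        hit = any_significant(a, b, r)
        if not hit:
            a += [draw_subject(rng, r) for _ in range(extra)]
            b += [draw_subject(rng, r) for _ in range(extra)]
            hit = any_significant(a, b, r)
        flexible += hit
    return honest / n_sims, flexible / n_sims


if __name__ == "__main__":
    honest_rate, flexible_rate = simulate()
    print(f"fixed plan:    {honest_rate:.3f}")
    print(f"flexible plan: {flexible_rate:.3f}")
```

The point of the design is that both analyses run on exactly the same null data. The only difference is how many chances the researcher gives themselves to find p < 0.05, and each extra chance is another opportunity for noise to look like a result.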

All of this is why independent replication is so important. This is part of the reason we set the threshold for being convinced by scientific evidence as high as we do – it has to rise above the noise of false positives. That noise is a lot louder than many people realize. Small studies, one-offs, or outcomes that only seem to come from one researcher, are just not convincing.

In addition to the background noise of varying degrees of scientific misconduct, there appear to be dedicated scientific subcultures that always seem to produce results that are against the scientific mainstream but consistently support a particular ideological position.

There are a few researchers who consistently produce results which call into question the safety of GMOs, for example, despite the fact that the rest of the scientific world finds that genetically modified foods are safe for human consumption.

There are a few researchers who consistently produce results which call into question the safety of vaccines, despite the fact that the rest of the scientific world finds that approved vaccines are generally safe and effective.

The same can be said about anthropogenic global warming and the safety of cell phones. Unfortunately, these outlier researchers produce the impression that there is more of a controversy than there really is. They create “two sides,” and the press often misses the fact that those sides are decidedly asymmetrical.

They also produce scientific studies that can be cited by those with a particular political or ideological opinion, giving their position the false appearance of being science-based. Work by Seralini and Infascelli has become the centerpiece for anti-GMO lobbying, for example.

Solutions to scientific misconduct?

Fortunately, I think we are already heading in the direction of addressing the issue of scientific misconduct. Efforts like Retraction Watch are helping. Journal editors need to have more of a process in place to sniff out fraud and misconduct during the peer-review process. Failing that, any concerns about misconduct raised post-publication need to be taken seriously, investigated, and then transparently corrected when necessary.

Obviously when a researcher is found to have committed brazen fraud, their career must be over. Their research can never be trusted again.

However, there is a vast gray zone of scientific misconduct that can be addressed through education. Researchers need to be explicitly trained in the nature of scientific misconduct and how to avoid it, and put on notice that such misconduct will not be tolerated.

The institution of science depends upon both transparency and trust. That trust is a product of the culture of the institution. Scientific culture cannot tolerate cutting corners, looking the other way, compromising rigor to get a desired outcome, fudging protocols, or yielding to pressure.

To a large extent the culture of science does promote honesty and transparency, but it seems we need to make a push even further in that direction.