Mammography is a topic that, as a breast surgeon, I can’t get away from. It’s a tool that those of us who treat breast cancer patients have used for over 30 years to detect breast cancer earlier in asymptomatic women and thus decrease their risk of dying of breast cancer through early intervention. We have always known, however, that mammography is an imperfect tool. Oddly enough, its imperfections come from two different directions. On the one hand, in women with dense breasts its sensitivity can be maddeningly low, leading it to miss breast cancers camouflaged by the surrounding dense breast tissue. On the other hand, it can be “too good” in that it can diagnose cancers at a very early stage.

Early detection isn’t always better

While intuitively such early detection would seem to be an unalloyed Good Thing, it isn’t always. Although detecting cancers early appears to improve survival, the phenomenon of lead time bias means that early detection can merely appear to improve survival even if earlier treatment has no impact whatsoever on the progression of the disease: because survival is measured from the date of diagnosis, moving the diagnosis earlier lengthens measured survival even if the patient dies on exactly the same date. Teasing out a true improvement in treatment outcomes from lead time bias is not trivial. Part of the reason early detection might not always lead to improved outcomes is a phenomenon called overdiagnosis. Basically, overdiagnosis is the diagnosis of disease (in this case breast cancer, although it is also an issue for other cancers) that would, if left untreated, never endanger the health or life of a patient, either because it never progresses or because it progresses so slowly that the patient will die of something else (old age, even) before the disease ever becomes symptomatic. Estimates of overdiagnosis due to mammography have been reported to be as high as one in five or even one in three. (Remember, the patients in these studies are not patients with a lump or other symptoms, but women whose cancer was detected only through mammography!) Part of the evidence for overdiagnosis is a 16-fold increase since 1975 in the incidence of a breast cancer precursor known as ductal carcinoma in situ, an increase that is almost certainly due not to biology but to the introduction of mass screening programs in the 1980s.
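To make lead time bias concrete, here is a toy calculation that is not taken from any of the studies discussed here. It follows one hypothetical woman whose tumor could be found by mammography at age 60, would cause symptoms at 63, and kills her at 70 no matter when it is treated. The `Patient` class and its field names are illustrative inventions.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Hypothetical patient timeline (all values are ages in years)."""
    screen_detectable_age: float  # earliest age a mammogram could find the tumor
    symptomatic_age: float        # age at which the tumor would cause symptoms
    death_age: float              # age at death, assumed NOT changed by earlier treatment


def survival_after_diagnosis(p: Patient, screened: bool) -> float:
    """Years from diagnosis to death; screening only moves the diagnosis date earlier."""
    diagnosis_age = p.screen_detectable_age if screened else p.symptomatic_age
    return p.death_age - diagnosis_age


patient = Patient(screen_detectable_age=60.0, symptomatic_age=63.0, death_age=70.0)
print(survival_after_diagnosis(patient, screened=False))  # 7.0 years
print(survival_after_diagnosis(patient, screened=True))   # 10.0 years, same date of death
```

Measured survival rises from 7 to 10 years, yet she dies at exactly the same age; the three extra years of "survival" are pure lead time.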

As a result of studies published over the last few years, the efficacy of screening mammography in decreasing breast cancer mortality has been called into question. For instance, in 2012 a study in the New England Journal of Medicine (NEJM) by Archie Bleyer and H. Gilbert Welch found that, while the number of cases of early stage breast cancer had doubled in the 30 years since mass mammographic screening programs were instituted, this increase wasn’t associated with a comparable decrease in diagnoses of late stage cancers, as one would expect if early detection were taking early stage cancers out of the “cancer pool” by preventing their progression. That’s not to say that Bleyer and Welch found no decrease in late stage diagnoses; they did, but the decrease was far smaller than the increase in early stage diagnoses, and they estimated the rate of overdiagnosis to be 31%. These results are in marked contrast to the way advocacy groups sometimes promote mammography. Last year, the 25-year follow-up of the Canadian National Breast Screening Study (CNBSS) was published. The CNBSS is a large, randomized clinical trial started in the 1980s to examine the effect of mammographic screening on mortality. The conclusion thus far? Screening with mammography is not associated with a decrease in mortality from breast cancer. Naturally, radiology groups pushed back, but their arguments were, in general, not convincing. In any case, although randomized studies did find that mammographic screening decreased breast cancer mortality, those studies were done decades ago, and treatments have improved markedly since, leaving open the question of whether it was mammographic screening or better adjuvant treatment that caused the decrease in breast cancer mortality that we have observed over the last 20 years.
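The logic of the Bleyer and Welch argument can be sketched as simple stage-shift bookkeeping. This is a deliberately simplified version with made-up rates per 100,000 women, assuming the underlying incidence of clinically meaningful cancer stays constant; the published analysis is more involved, so this should not be read as their method.

```python
def overdiagnosis_estimate(early_before, early_after, late_before, late_after):
    """Simplified stage-shift accounting (all inputs are rates per 100,000 women).

    Assumption: if screening worked purely by catching cancers early, every extra
    early-stage diagnosis would be matched by one fewer late-stage diagnosis.
    Extra early-stage diagnoses beyond that match are counted as overdiagnosis.
    """
    extra_early = early_after - early_before
    fewer_late = late_before - late_after
    overdiagnosed = extra_early - fewer_late
    diagnosed_after = early_after + late_after
    return overdiagnosed, overdiagnosed / diagnosed_after


# Hypothetical rates: early-stage diagnoses rise sharply, late-stage diagnoses barely fall.
excess, fraction = overdiagnosis_estimate(early_before=100, early_after=180,
                                          late_before=100, late_after=90)
print(excess, round(fraction, 3))
```

With these invented numbers, 80 extra early-stage diagnoses are offset by only 10 fewer late-stage ones, leaving 70 per 100,000 (about 26% of all diagnoses after screening) unaccounted for by a simple stage shift.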

Given that it’s been a while since I’ve looked at the topic (other than a dissection of well-meaning but misguided mandatory breast density reporting laws a month ago), I thought now would be a good time to look at some newer evidence in light of the publication of a new study that’s producing familiar headlines, such as “Mammograms may not reduce breast cancer deaths”.

Here we go again.

An ecological study of mammography and breast cancer

The study that produced a brief flurry of the usual headlines last week was published in JAMA Internal Medicine a week ago by Harding et al: “Breast Cancer Screening, Incidence, and Mortality Across US Counties”. It was a collaborative effort by doctors in Seattle and at Harvard University and Dartmouth. One disclosure that I have to make here is that one of the authors is H. Gilbert Welch, whose studies I (and others) have discussed here before on multiple occasions. Back then, I had had little contact with him. Now, however, I must disclose that I am working on a brief commentary on cancer screening with him and another coauthor. I had nothing to do with the study under discussion, though. Thus endeth the disclosure.

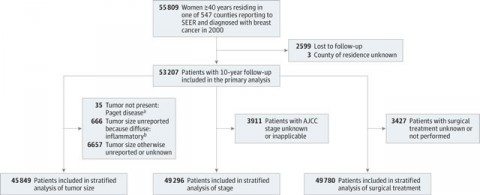

So what did the study do? In concept, the study is very simple, although carrying out such a study is devilishly difficult. Boiled down to a single question, the investigators asked whether differences in rates of screening mammography correlate with differences in breast cancer mortality. In other words, does a high frequency of screening correlate with lower breast cancer mortality, and vice versa? To find out, the authors used the Surveillance, Epidemiology, and End Results (SEER) database to perform an ecological study of 16 million women aged 40 or older who resided in 547 counties reporting to the database, a population drawn from approximately one quarter of the US population. The study covered the period from January 1, 2000 to December 31, 2010. Of the 16 million women, 53,207 were diagnosed with breast cancer in 2000 and followed for the next ten years. The extent of screening in each county was assessed as the percentage of included women who had undergone screening mammography within the previous two years. The main outcomes were breast cancer incidence in 2000 and incidence-based breast cancer mortality during the ten-year follow-up. Both were calculated for each county and age-adjusted to the US population. Incidence-based mortality was chosen as the endpoint because it excludes deaths from cancers diagnosed before the study period and is insensitive to overdiagnosis: it counts breast cancer deaths among women diagnosed during the study period relative to the whole population, and overdiagnosed cancers, by definition, add no breast cancer deaths.
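"Age-adjusted to the US population" refers to direct standardization: each county's age-specific rates are reweighted to a common age structure, so that a county with many older women does not look worse simply because breast cancer becomes more common with age. The sketch below assumes three age groups and invents both the county counts and the standard weights; the actual US standard population weights and age groups are different.

```python
def age_adjusted_rate(cases, population, standard_weights):
    """Directly age-standardized rate per 100,000.

    cases, population: counts per age group for one county
    standard_weights: share of the standard population in each age group (sums to 1)
    """
    if len(cases) != len(population) or len(cases) != len(standard_weights):
        raise ValueError("all inputs need one entry per age group")
    if abs(sum(standard_weights) - 1.0) > 1e-9:
        raise ValueError("standard weights must sum to 1")
    # Weight each age-specific rate by the standard population's age structure.
    rate = sum(w * c / n for c, n, w in zip(cases, population, standard_weights))
    return rate * 100_000


# Hypothetical county with three age groups (40-49, 50-64, 65+) and made-up weights.
cases = [20, 60, 80]
population = [100_000, 100_000, 50_000]
weights = [0.40, 0.35, 0.25]

crude = sum(cases) / sum(population) * 100_000
adjusted = age_adjusted_rate(cases, population, weights)
print(round(crude, 1), round(adjusted, 1))
```

The crude rate (64 per 100,000) and the age-adjusted rate (69 per 100,000) differ only because this made-up county's age structure differs from the made-up standard; adjusting every county to the same standard is what makes their rates comparable.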

Here is the schema for how subjects were chosen:

The not-unexpected and the unexpected results

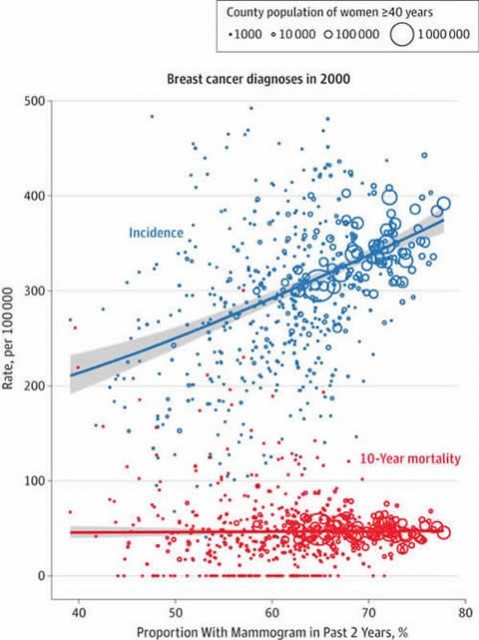

So what were the results? Not unexpectedly, there was a positive correlation between the extent of screening and breast cancer incidence. This is not in the least bit surprising; in fact, I would have been surprised had this result not been found. Basically, an absolute increase of 10 percentage points in the extent of screening was associated with 16% more breast cancer diagnoses. Unfortunately, this increase in detection was not associated with a decline in incidence-based breast cancer mortality. A picture is, as they say, worth 1,000 words, and here’s the “money” figure, so to speak:
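One way to read "10 percentage points, 16% more diagnoses" is as a multiplicative (log-linear) effect. The sketch below assumes that form, which is a simplification for illustration rather than a description of the regression model the authors actually fitted, and extrapolates it to larger gaps in screening between counties.

```python
RR_PER_10_POINTS = 1.16  # +10 percentage points of screening ~ 16% more diagnoses (from the text)


def relative_incidence(delta_points: float, rr_per_10: float = RR_PER_10_POINTS) -> float:
    """Incidence ratio between two counties whose screening differs by delta_points.

    Assumes the effect compounds multiplicatively (log-linear model); this is an
    illustrative assumption, not necessarily the model the authors fitted.
    """
    return rr_per_10 ** (delta_points / 10)


for gap in (10, 20, 30):
    print(gap, round(relative_incidence(gap), 3))
```

Under this assumption, a county screening 30 percentage points more than another would be expected to diagnose roughly 56% more breast cancer, while, per the study, showing no corresponding decline in breast cancer mortality.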

Notice that incidence rises with the percentage of women aged 40 and older who underwent mammography during the previous two years, but there is no correlation between the percentage screened and 10-year incidence-based mortality from breast cancer. I realize that this looks like what we sometimes call a “star chart,” but for population-level data like this the correlation between percentage screened and incidence is striking; equally striking is the lack of correlation between percentage screened and mortality.

Next, the authors stratified the data by tumor size, with a cutoff of 2 cm in diameter, to determine whether screening had more of an effect on small than on large tumors. That cutoff was likely chosen because tumors 2 cm or less in diameter are considered stage I (assuming negative axillary lymph nodes), while tumors larger than 2 cm are at least stage II. Consistent with the results reported in the 2012 NEJM study, the effect of mammography was more marked on smaller tumors: a 10 percentage point increase in screening was associated with a 25% increase in the incidence of small tumors but only a 7% increase in the incidence of larger tumors. Of note, screening was not associated with any decrease in the incidence of larger tumors. That is contrary to what the hypothesis behind early detection predicts: if treating early stage tumors kept them from progressing, fewer tumors would reach later stages before being detected, and the incidence of larger tumors should fall.
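The contrast between what early detection should produce and what was observed can be put side by side. In this sketch the baseline of 100 small and 100 large tumors per 100,000 women is invented; only the 25% and 7% figures come from the study as described above. The "ideal" pattern assumes that every extra small tumor is one that would otherwise have been found large.

```python
def observed_pattern(small, large, small_ratio=1.25, large_ratio=1.07):
    """Apply the reported associations for +10 percentage points of screening:
    about 25% more small tumors and about 7% more larger tumors."""
    return small * small_ratio, large * large_ratio


def ideal_pattern(small, large, caught_early):
    """What pure early detection would predict: tumors caught early move from the
    'large' column to the 'small' column, so the total number of diagnoses is unchanged."""
    return small + caught_early, large - caught_early


baseline_small, baseline_large = 100.0, 100.0  # hypothetical rates per 100,000 women
obs_small, obs_large = observed_pattern(baseline_small, baseline_large)
ideal_small, ideal_large = ideal_pattern(baseline_small, baseline_large, caught_early=25.0)

print("observed:", obs_small, round(obs_large, 1), "total", round(obs_small + obs_large, 1))
print("ideal:   ", ideal_small, ideal_large, "total", ideal_small + ideal_large)
```

Both patterns add 25 small tumors, but only the ideal one removes 25 large tumors and leaves the total unchanged; the observed pattern raises the total and leaves large tumors slightly higher, which is the signature of overdiagnosis rather than stage shift.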

Finally, the authors looked at disease stage and extent of surgery. They did this because one rationale for mammography is that, even if early detection doesn’t have much of an effect on incidence-based mortality, at least treating such cancers earlier allows women to undergo less invasive, less aggressive therapy. Here the results are somewhat mixed. The authors reported that a 10 percentage point increase in screening is associated with an increased incidence of early disease (stages 0-II) but no change in the incidence of locally advanced and metastatic disease (stages III and IV). That’s consistent with what they found in their first analysis and with what Bleyer and Welch found in 2012. The authors also found that a 10 percentage point increase in screening is indeed associated with more breast-conserving surgical procedures (lumpectomy), but not with any concomitant reduction in mastectomies. Putting it all together, I’d describe this result as showing that all the additional breast-conserving surgery went toward the excess early stage cancers found by screening.

The authors conclude:

For the individual, screening mammography should ideally detect harmful breast cancers early, without prompting overdiagnosis. Therefore, screening mammography ideally results in increased diagnosis of small cancers, decreased diagnosis of larger cancers (such that the overall risk of diagnosis is unchanged), and reduced mortality from breast cancer. Across US counties, the data show that the extent of screening mammography is indeed associated with an increased incidence of small cancers but not with decreased incidence of larger cancers or significant differences in mortality. In addition, although it has been hoped that screening would allow breast-conserving surgical procedures to replace more extensive mastectomies, we saw no evidence supporting this change.

And ask:

What explains the observed data? The simplest explanation is widespread overdiagnosis, which increases the incidence of small cancers without changing mortality, and therefore matches every feature of the observed data. Indeed, our cross-sectional findings are supported by the prior longitudinal analyses of Esserman et al29 and others,30,31 in which excess incidences of early-stage breast cancer were attributed to overdiagnosis. However, four alternatives are also logically possible: lead time, reverse causality, confounding, and ecological bias.

The authors make a convincing case that the first three of these alternatives are not major factors. For instance, lead time can become an issue in counties where screening has recently increased, because screening moves diagnoses earlier in time, as they describe in the supplement:

Tumors in screened women are diagnosed earlier than tumors in unscreened women. It is not known exactly how much advanced notice (lead time) is provided by mammography screening. If mammography screening results in long lead times, then breast cancer incidence should increase when more women are screened. For example, suppose a woman receives her first screening today, and the screening detects a breast tumor that otherwise would not have been detected for another two years. Accordingly, the incidence of breast cancer would be increased today, and would also be decreased two years from now.
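The supplement's one-woman example generalizes to a county. Below is a toy model, not the authors' analysis: a fixed number of tumors would surface clinically each year, each is detectable by mammography a fixed number of years earlier, and a single screening round in year 0 reaches some fraction of women with perfect sensitivity. Every parameter is hypothetical.

```python
def diagnoses_by_year(years, new_per_year, lead, coverage):
    """Breast cancer diagnoses per year with ONE screening round in year 0.

    Toy model (all parameters hypothetical):
      - new_per_year tumors would surface clinically each year;
      - each tumor is detectable by mammography `lead` whole years before it surfaces;
      - the year-0 screen reaches a fraction `coverage` of women and misses nothing.
    `years` must be a contiguous range that includes 0.
    """
    counts = {y: 0.0 for y in years}
    for surface_year in years:
        if 0 <= surface_year <= lead:
            # Already detectable at the year-0 screen: screened women are diagnosed early.
            counts[0] += coverage * new_per_year
            counts[surface_year] += (1 - coverage) * new_per_year
        else:
            counts[surface_year] += new_per_year
    return counts


for year, n in diagnoses_by_year(range(-2, 6), new_per_year=100, lead=2, coverage=0.5).items():
    print(year, n)
```

Diagnoses double in year 0 and then dip to half in years 1 and 2, while the total over those three years is unchanged. A transient spike followed by a dip is the kind of lead time signature the authors looked for in counties where screening had recently increased.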

By examining correlations with screening frequency at different time points in counties that had recently increased their rates of screening, they failed to find an effect that could be attributed to lead time. Using additional analyses, they were also able to counter the criticisms that their results were due to reverse causality (for example, higher underlying breast cancer incidence in some counties leading to greater emphasis on screening there) or to confounding (risk factors for breast cancer that happen to be associated with screening).

Epidemiology and the “ecological fallacy”

But what about the ecological fallacy? In epidemiology, the word “ecological” has quite a different meaning than it does in biology or everyday colloquial usage. Most medical studies are done at the level of the individual. In other words, data are gathered for each individual, such as risk factors, diagnosis, age and other demographic factors, treatment, and outcome; the individual is the unit of analysis. In contrast, in an ecological study, data are collected at the group level, and the group is the unit of analysis. In the current study, data were collected at the county level, and the unit of analysis was the population of each county assessed. This can be a problem.

The term “ecological fallacy” was coined by the sociologist H.C. Selvin in 1958, but the perils of imputing correlations found in groups to the individuals composing them have been known at least since 1938, when psychologist Edward Thorndike published a paper entitled “On the fallacy of imputing the correlations found for groups to the individuals or smaller groups composing them”. Basically, ecological analyses can inflate relative risks many-fold, because they are more sensitive than individual-level analyses to problems such as model misspecification; confounding; non-additivity of exposure and covariate effects (effect modification); exposure misclassification; and non-comparable standardization.
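To see how a group-level correlation can overstate an individual-level relationship, here is a small simulation with entirely made-up parameters. A hypothetical county-level factor raises both screening uptake and the rate of some outcome, while an individual woman's screening status has no effect at all on her own outcome. Pearson correlation is computed by hand to keep the example dependency-free.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient (booleans are treated as 0/1)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(n_counties=20, women_per_county=2000, seed=1):
    rng = random.Random(seed)
    indiv_screen, indiv_event = [], []
    county_screen, county_event = [], []
    for k in range(n_counties):
        # Hypothetical county-level factor that raises BOTH screening uptake and the
        # event rate; within a county, screening and events are drawn independently.
        factor = k / (n_counties - 1)  # runs from 0.0 to 1.0 across counties
        p_screen = 0.4 + 0.4 * factor
        p_event = 0.02 + 0.04 * factor
        screened = [rng.random() < p_screen for _ in range(women_per_county)]
        events = [rng.random() < p_event for _ in range(women_per_county)]
        indiv_screen += screened
        indiv_event += events
        county_screen.append(sum(screened) / women_per_county)
        county_event.append(sum(events) / women_per_county)
    return pearson(indiv_screen, indiv_event), pearson(county_screen, county_event)


individual_r, county_r = simulate()
print(round(individual_r, 3), round(county_r, 3))
```

With these settings the county-level correlation comes out high while the individual-level correlation is close to zero, even though screening does nothing to any individual here; the county-level association is manufactured entirely by the shared county factor.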

As the authors point out in their supplement, ecological biases arise because values measured for individuals are aggregated before analysis, resulting in a loss of information, particularly when the aggregation takes place at a larger scale. They also concede that ecological biases can’t be completely ruled out without access to individual-level data, but they did their best, noting that one warning sign of ecological bias is that the results of an analysis vary with the population of the counties analyzed. Counties with larger populations would be expected to show more ecological bias than counties with smaller populations, but this was not seen. As the authors point out, similar relationships between screening and incidence were found regardless of whether the counties included 5,000, 50,000, or 500,000 women, and there was no significant relationship between screening and 10-year mortality from breast cancer in any of them. While this analysis doesn’t rule out ecological biases (again, only access to individual-level data can do that), it is reassuring that there were no obvious warning signs that such a bias was creeping in. The authors also note that, in essence, you can’t get away from ecological analyses when trying to estimate rates of overdiagnosis, because “overdiagnosis is currently not observable in individuals, only in populations,” which is quite true. They cite a recent comparison of methods for estimating overdiagnosis that concluded that “well conducted ecological and cohort studies in multiple settings are the most appropriate approach for quantifying and monitoring overdiagnosis in cancer screening programs.”

A usual suspect chimes in

Not unexpectedly, a radiologist whom we’ve met before chimed in almost immediately in his usual inimitable fashion. I’m referring, of course, to Dr. Daniel Kopans, Director of Breast Imaging at Massachusetts General Hospital and an outspoken (to say the least!) mammography advocate. Predictably, it didn’t take him long at all to produce one of the bombast-filled criticisms for which he is so well known, in which he imputes dishonest motives to those with whom he has a scientific disagreement, a character trait that both amuses and annoys because he himself is so sensitive to criticism. In this case, his attack is even more off-base than usual. Indeed, I’m going to go straight to the paragraph in his response that shows that Dr. Kopans almost completely missed the point of Harding et al:

One also has to wonder what the authors have done with the data. Between 2000 and 2010 (10 years of follow-up), the SEER breast cancer death rate fell from 26.6 deaths per 100,000 women to 21.9 deaths per 100,000 women — a decline of 18% (almost 2% per year), yet the authors claim there was no decline in deaths over the same period using SEER data. Something is wrong. This should have been recognized by the peer review and the fundamental discrepancy addressed.

Puzzled by this particular criticism, I looked through the paper again to find where the authors claimed that breast cancer mortality had not decreased from 2000 to 2010. I failed to find it. In any event, what Dr. Kopans is criticizing is not the analysis the authors did. They did not look at whether the death rate from breast cancer fell over the ten years of the study. What they did was look at one year! That year was 2000. They then compared the rates of mammography uptake in each county for that year with the ten-year incidence-based mortality. I mean, bloody hell! It’s right there in the abstract:

Breast cancer incidence in 2000 and incidence-based breast cancer mortality during the 10-year follow-up. Incidence and mortality were calculated for each county and age adjusted to the US population.

The primary measure was not breast cancer mortality over time, so there was no reason to point out that breast cancer mortality declined between 2000 and 2010. But the authors did anyway. In the supplemental data, they noted that breast cancer mortality has been declining, observing, “As breast cancer mortality has been decreasing since 1990,4 there is no reason to suspect that breast cancer mortality in future years will exceed breast cancer mortality in 2000.” In any case, the primary measure in this study was the ten-year mortality of a single cohort: women diagnosed with breast cancer in 2000. That’s why the SEER database had to be examined through 2010. This particular criticism by Dr. Kopans is thus irrelevant to the study, and it is so egregiously wrong that it is tempting to use it to dismiss everything else he wrote.
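The cross-sectional design is why a nationwide decline in breast cancer mortality does not undermine the analysis. If the decline is roughly uniform across counties (an assumption made here purely for illustration), it rescales every county's mortality by the same factor, which rescales the slope of mortality against screening by that same factor, so a flat relationship stays flat. The county values below are invented; only the 26.6 and 21.9 per 100,000 figures come from Dr. Kopans' quote.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Hypothetical counties: percent of women screened and breast cancer deaths per 100,000.
pct_screened = [50, 55, 60, 65, 70, 75]
mortality_2000 = [26.0, 27.1, 26.4, 26.9, 26.2, 26.8]

# Overall decline implied by the SEER figures quoted above, applied uniformly to every county.
decline_factor = 21.9 / 26.6
mortality_2010 = [m * decline_factor for m in mortality_2000]

total_decline_pct = (1 - decline_factor) * 100           # about 17.7%
annual_decline_pct = (1 - decline_factor ** 0.1) * 100   # about 1.9% per year, compounded

slope_2000 = ols_slope(pct_screened, mortality_2000)
slope_2010 = ols_slope(pct_screened, mortality_2010)
print(round(total_decline_pct, 1), round(annual_decline_pct, 1))
print(round(slope_2000, 4), round(slope_2010, 4))
```

Both years show the same near-zero slope up to the common scaling factor: the national decline says nothing about whether counties that screen more do better than counties that screen less, which is the question Harding et al actually asked.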

Hints, allegations, and things that should have been left unsaid

Also note how, here and throughout the article, Dr. Kopans insinuates that the authors did something dishonest. He’s perhaps a little less blatant about insinuating scientific fraud than he used to be (perhaps he learned his lesson from his previous similar insinuations about the CNBSS), but to Dr. Kopans the authors aren’t just wrong; they are dishonest, or at least so ideologically motivated that they don’t care. Don’t believe me? Look at his very first paragraph:

The ploy used by Harding et al in their new study is classic. Explain why using your approach is scientifically unsupportable, and then go ahead and use it claiming there is no alternative.

Also note the part where he claims that studies like this are “being used to reduce access to screening,” even going so far as to put the word “studies” in scare quotes. This is a common theme in Dr. Kopans’ oeuvre on mammography, as well as in that of various radiology groups: that there is a coordinated attack by anti-mammography ideologues who want to take away women’s access to mammography and don’t care that (if you believe Dr. Kopans’ claims) thousands will die unnecessarily as a result. Indeed, I frequently quote Dr. Kopans’ famous statement about the 2012 Bleyer and Welch NEJM study:

“This is simply malicious nonsense,” said Dr. Daniel Kopans, a senior breast imager at Massachusetts General Hospital in Boston. “It is time to stop blaming mammography screening for over-diagnosis and over-treatment in an effort to deny women access to screening.”

Here’s what he said after the US Preventive Services Task Force (USPSTF) recommended against routine mammographic screening of women aged 40-49 in 2009:

Asked for a possible reason why the task force would ignore expert opinion, Dr. Kopans told Medscape Radiology that there is a nucleus of people who have long been opposed to mammography.

“I just got some updated information that they were involved with the task force. I hate to say it, it’s an ego thing. These people are willing to let women die based on the fact that they don’t think there’s a benefit.”

One notes that if “they” don’t think there’s a benefit, then “they” don’t think they’re consigning thousands of women per year to death, as Dr. Kopans believes they are. Consistent with these positions, Dr. Kopans goes on the attack again in his response to this most recent study:

The claim of massive overdiagnosis of invasive cancers has been manufactured. No one has ever seen an invasive breast cancer disappear on its own, yet it has been claimed there are tens of thousands each year. When we looked directly at patient data, we found that more than 70% of the women who died of breast cancer in major Harvard Medical School teaching hospitals were among the 20% of women who were not participating in screening.3

Note the word choice: “Manufactured.” Once again, he is imputing dishonest—or at least ideologically biased—motives to those with whom he disagrees.

What Dr. Kopans won’t admit is that the study he cites has numerous weaknesses, in particular a bias toward younger women with more aggressive cancers (not unexpected in a tertiary care hospital) who, not surprisingly, would be less likely to have been screened. Nor will he acknowledge the simple observation that, by definition, overdiagnosis can’t occur in women who die of breast cancer. Be that as it may, Dr. Kopans concludes:

Enough is enough. The effort to reduce access to screening has been relentless by a small group that has continued to use specious arguments and scientifically flawed analyses to support their agenda. At some point, peer reviewers need to read more carefully and stop the publication of scientifically unsupportable and misleading material.

My retort is that, at some point, scientists need to call out Dr. Kopans for so frequently assuming that those who disagree with him do so out of less than honorable motives. I don’t even make that assumption about most leaders of the antivaccine movement, who, though profoundly wrong, are motivated by what they mistakenly believe to be an evil that must be opposed. I realize that Dr. Kopans really does appear to believe that researchers whose studies conclude that mammography might be less effective at preventing breast cancer deaths than he believes it to be are monsters willing to lie and twist science to deny women mammography, but such is not the case. For example, does Dr. Kopans really think that the authors of the CNBSS wanted their study to find that screening did not save lives? Of course they didn’t! Scientists don’t start large, expensive, multi-institutional studies hoping for a negative result! They just don’t! Such studies are too much work and require too many resources. Worse, any time Dr. Kopans is called out for assuming the worst about physicians and scientists with whom he disagrees, he disingenuously adopts an attitude of, “Who, me? I never accused anyone of that.”

One also can’t help but notice that in their paper Harding et al bend over backwards to be clear and up front about the limitations of their study, particularly the ecological fallacy. That’s just good science. It’s also what Dr. Kopans turns into a weapon to try to discredit the entire study. Finally, the usual error that results from the ecological fallacy is the tendency to find associations that don’t exist or to overestimate associations that do exist. Consequently, the most probable error in Harding et al is to have overestimated the association between screening and breast cancer diagnoses, which makes the negative finding with respect to mortality stand out all the more.

The bottom line: Breast cancer screening is not simple

It must be re-emphasized that Harding et al is just one study among many. Although it reinforces the growing consensus that mammographic screening leads to significant overdiagnosis, it is nowhere near the final word. Indeed, there is a much more measured commentary on the study published alongside it by Joann G. Elmore, MD, MPH and Ruth Etzioni, PhD at the Fred Hutchinson Cancer Research Center. Elmore and Etzioni express proper caution about interpreting the results of ecological studies, noting:

Most scientists now acknowledge that there is some level of overdiagnosis in breast cancer screening, but the frequency of overdiagnosis has not been conclusively established. Estimates in the literature cover a frustratingly broad range, from less than 10% to 50% or more of breast cancer diagnoses. Published studies of overdiagnosis vary widely not only in their results but also in their populations, methods, and measures.4 In practice, the frequency of overdiagnosis is likely quite different for DCIS and invasive tumors.

Sadly, we are left in a conundrum. Women will increasingly approach their physicians with questions and concerns about overdiagnosis, and we have no clear answers to provide. We do not know the actual percentage of overdiagnosed cases among women screened, and we are not able to identify which women with newly diagnosed DCIS or invasive cancer are overdiagnosed. Many screening guidelines now mandate shared and informed decision making in the patient-physician relationship, but this is not an easy task.

Indeed, it is not, particularly when data conflict. For example, just last month, the World Health Organization, through its International Agency for Research on Cancer (IARC), published new recommendations on screening mammography. After considering older randomized clinical trials and voicing some skepticism over whether they are still relevant, the IARC examined evidence from 20 cohort and 20 case-control studies, all conducted in the developed world (Australia, Canada, Europe, or the United States), that it considered informative for evaluating the effectiveness of mammographic screening programs. Based on its analysis, the IARC notes that screening mammography could be associated with as much as a 40% reduction in the risk of dying from breast cancer, and it concluded:

After a careful evaluation of the balance between the benefits and adverse effects of mammographic screening, the working group concluded that there is a net benefit from inviting women 50 to 69 years of age to receive screening. A number of other imaging techniques have been developed for diagnosis, some of which are under investigation for screening. Tomosynthesis, magnetic resonance imaging (MRI) (with or without the administration of contrast material), ultrasonography (handheld or automated), positron-emission tomography, and positron-emission mammography have been or are being investigated for their value as supplementary methods for screening the general population or high-risk women in particular.

However, the IARC also cautioned about the risks of mammography, including overdiagnosis. Interestingly, its estimate of overdiagnosis, ranging from 4% to 11%, was lower than most estimates I have seen.

Unfortunately, there remains a large contingent of radiologists who, like Dr. Kopans, believe that overdiagnosis either does not exist or is such a small problem as not to be worth the consideration it is currently receiving. Fortunately, that appears to be changing. For instance, a 2015 issue of Academic Radiology will center on the theme of overdiagnosis, and several of its articles have already been published electronically ahead of the issue’s release, including an article by Archie Bleyer updating Bleyer and Welch’s 2012 NEJM article; a commentary by H. Gilbert Welch, “Responding to the Challenge of Overdiagnosis”; and an article by Saurabh Jha, an academic radiologist, “Barriers to Reducing Overdiagnosis”, which notes that the first step in reducing overdiagnosis is to admit that overdiagnosis occurs. I couldn’t have said it better myself, and I might very well be blogging about these articles in the future.

Implications for primary care and beyond

It’s not just radiologists, either, who seem to have a problem with the concept of overdiagnosis. I’m frequently surprised at how few primary care physicians and even oncologists, both medical and surgical, “grok” the concepts of overdiagnosis, lead time bias, and length bias in screening for asymptomatic diseases, especially cancer. Examples of this misunderstanding sometimes even appear in the lay press; for example, one doctor accused the New York Times of “killing” his patient because it had published news stories about studies questioning the value of PSA screening. It’s also true that the lay public assumes that early diagnosis is always better, damn the consequences. How are they to be educated if we physicians do not adequately understand the complexities and tradeoffs involved in screening ourselves?

Although Dr. Kopans has implied that I belong to the evil cabal of anti-mammography ideologues against whom he regularly does battle, I actually still recommend screening mammography for my patients 50 and over. The reason is simple: I still believe it offers benefits in terms of reducing the risk of dying from breast cancer, albeit benefits more modest than I used to believe. However, I now also point out the potential harms. For women aged 40-49, I’m much less dogmatic than I used to be. If there’s high risk due to family history or other factors, I will more strongly recommend screening; if there isn’t, I will tell them about screening, explain the potential benefits and risks, and then more or less leave the decision up to the patient. Of course, you need to realize that most of my patients already have or have had breast cancer, which puts them at high risk of developing another breast cancer, even if they are under 50; I don’t see many women in their 40s who are just asking about screening.

We can overcome the problems of overdiagnosis and overtreatment due to cancer screening. Developing better screening tests will not be sufficient to achieve this end, however. What will be required is the development of predictive tests that tell us which lesions found on mammography or future screening tests are likely to progress within the patient’s lifetime to cause death or serious harm and which are unlikely to do so. Such information would allow us to stratify cancers into those that need to be treated promptly and those that can safely undergo “watchful waiting.” This will not be an easy task. In the meantime, we do the best we can with the data that we have—and its uncertainty.