Placebo effects help you feel better; they don’t actually make you better.

It is almost inevitable that whenever we post an article critical of the claims being made for a particular treatment, alternative philosophy, or alternative profession, someone in the comments will counter a careful examination of published scientific evidence with an anecdote. Their argument boils down to, “It worked for me, so all of your scientific evidence and plausibility is irrelevant.”

Both components of this argument are invalid. Even if we grant that a treatment worked for one individual, that does not counter the (carefully observed) experience of all the subjects in the clinical trials. They count too – I would argue they count more because we can verify all the important aspects of their story.

I want to focus, however, on the first part of that statement, the claim that a treatment “worked” for a particular individual. Most people operationally define “worked” this way: they took a treatment and then they improved in some way. This, however, is a problematic definition on many levels.

Placebo effects

Much of the disagreement about how to define “works” comes down to placebo effects. The generally accepted scientific approach is to conclude that an intervention works when the desired effect is greater than that of a placebo. That is, when all other variables are controlled for, the intervention as an isolated variable is associated with an improved outcome. That is the basic logic of a double-blind, placebo-controlled trial.

Proponents of “alternative” treatments that tend to fail such trials have turned to the argument that placebo effects should count also, not just effects in excess of placebo. They essentially argue: who cares if something works through placebo, as long as it works?

One problem is the assumption that because one is feeling better, the treatment therefore worked. This is the post hoc ergo propter hoc logical fallacy. We do not know what the subject’s outcome would have been had they not received the treatment, or had they received a different one.

In this way many placebo effects are just an illusion, not a real benefit.

Simple regression to the mean explains why this is likely. People will tend to seek treatment when their symptoms are at their worst, which means they are likely, by chance alone, to regress to the mean of the distribution of symptoms – or return to a less severe state, which will be interpreted as improvement.
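To make the point concrete, here is a minimal sketch in Python of regression to the mean. It is an illustration under made-up assumptions, not data from any study: symptom severity is modeled as a personal baseline plus random day-to-day noise, people seek treatment only when their score passes an arbitrary threshold, and the treatment they receive does nothing at all. The function name and every number below are hypothetical.

```python
import random
import statistics


def simulate_regression_to_mean(n_people=10_000, threshold=7.0, seed=0):
    """Simulate symptom scores that fluctuate randomly around a baseline.

    No effective treatment exists in this model. People "seek treatment"
    only when today's score exceeds `threshold`; we then look at their
    score at a later, independent time point.
    """
    rng = random.Random(seed)
    at_visit, at_followup = [], []
    for _ in range(n_people):
        baseline = rng.gauss(5.0, 1.0)              # long-run average severity
        today = baseline + rng.gauss(0.0, 2.0)      # day-to-day noise
        if today > threshold:                       # a bad day: seeks treatment
            later = baseline + rng.gauss(0.0, 2.0)  # follow-up, treatment is inert
            at_visit.append(today)
            at_followup.append(later)
    return statistics.mean(at_visit), statistics.mean(at_followup), len(at_visit)


visit_mean, followup_mean, n_seekers = simulate_regression_to_mean()
print(f"{n_seekers} people sought treatment")
print(f"mean severity at visit:     {visit_mean:.2f}")
print(f"mean severity at follow-up: {followup_mean:.2f}")
```

Under these assumptions the average score at follow-up comes out lower than at the visit, even though nothing was done. Selecting people at their worst is enough to produce apparent improvement.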

This is further compounded by confirmation bias. Symptoms are often highly complex, variable, and subjective. What counts as “better” can be determined post hoc by whatever happens. For headaches, for example, there are many variables to consider – frequency, duration, severity, response to pain medication, the need for pain medication, level of disability, and a host of associated symptoms such as nausea, blurry vision, or mental fog.

All of these features of a headache can change, providing ample opportunity to cherry pick which outcome to consider. That is exactly why in a clinical trial the primary outcome measure has to be chosen prior to collecting data. This is also why we are suspicious of clinical trials that use multiple secondary outcome measures, and claim a positive study when one of those secondary measures is improved.
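The arithmetic behind that suspicion is simple. As a rough sketch, assume each outcome measure is an independent test with the conventional 5% significance threshold. Both assumptions are mine, for illustration only; real outcome measures are usually correlated, which changes the exact numbers. The chance that at least one of n measures comes up “positive” for a treatment that does nothing is then 1 minus 0.95 to the power n.

```python
def chance_of_spurious_hit(n_outcomes, alpha=0.05):
    """Probability that at least one of `n_outcomes` independent measures
    crosses the significance threshold when the treatment does nothing."""
    return 1.0 - (1.0 - alpha) ** n_outcomes


for k in (1, 5, 10, 20):
    p = chance_of_spurious_hit(k)
    print(f"{k:2d} outcomes: {p:.0%} chance of at least one false positive")
```

With ten such measures the chance of at least one spurious positive is about 40%, which is why a single improved secondary measure is weak evidence.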

In other words – the natural course of a symptom or illness provides very noisy data. It does not make scientific sense to pick out only the positive effects from this distribution of data and declare that the treatment “worked” in those instances.

This is exactly like having an alleged psychic guess cards, perform no better than chance, and then declare that their psychic power was working for those random hits they did make. You have to look at all the data to see if there was an effect.

This principle applies to medical interventions as well – you have to look systematically at all the data to see if there is an effect. Saying “well it worked for me” is exactly like saying that the psychic powers worked whenever they randomly hit, even though the overall pattern was negative (consistent with random guessing).
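The card-guessing analogy can be sketched in a few lines of Python. This is a toy model, and its details are assumptions of mine: a deck with five equally likely symbols, 1,000 guesses, and a “psychic” who guesses at random. Counting all the data shows a hit rate near chance; counting only the hits shows a perfect record, which tells us nothing.

```python
import random


def run_guessing_session(n_cards=1000, n_symbols=5, seed=1):
    """Return the number of hits when every guess is purely random."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_cards):
        card = rng.randrange(n_symbols)   # the card actually drawn
        guess = rng.randrange(n_symbols)  # the "psychic" guess, no information
        if card == guess:
            hits += 1
    return hits


n_cards, n_symbols = 1000, 5
hits = run_guessing_session(n_cards, n_symbols)
print(f"all guesses: {hits}/{n_cards} hits, rate {hits / n_cards:.2f} "
      f"(chance is {1 / n_symbols:.2f})")
# Cherry picking: keep only the hits and the record is perfect by construction.
print(f"hits only:   {hits}/{hits} counted as the power 'working'")
```

The second line of output is the “it worked for me” view of the session: every retained case is a success because the failures were discarded before counting.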

Trying multiple therapies for the same problem (in addition to the same therapy for multiple problems) adds another layer of randomness to the data, which is then ripe for cherry picking and confirmation bias. For example, someone might use medication, acupuncture, chiropractic, and homeopathic remedies at the same time for their headaches and, if they improve, credit one or more of the alternative treatments. Or they may try them in sequence, and whichever one they were taking when their symptoms improved on their own gets the credit due to the post hoc fallacy.

We intuitively ignore the failed treatments – the misses – and commit the lottery fallacy by asking the wrong question: what are the odds of my headache getting better shortly after taking treatment X? But the real question is – what are the odds of my headache getting better at any time, and that I would have recently tried some treatment?
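Here is a hedged sketch of the sequential case in Python. Everything in it is hypothetical: four generically named treatments that are all inert by construction, a fixed 10% chance each day that symptoms resolve on their own, and a switch to the next treatment every seven days. Whichever treatment is in use on the day of spontaneous remission gets the credit.

```python
import random
from collections import Counter

# Hypothetical, interchangeable labels; none of them does anything in this model.
TREATMENTS = ["treatment A", "treatment B", "treatment C", "treatment D"]


def credited_treatment(rng, daily_remission=0.1, days_per_treatment=7):
    """Return the treatment in use on the day symptoms resolve on their own.

    The chance of remission is the same every day, regardless of which
    treatment is being taken, so no treatment has any real effect.
    """
    day = 0
    while True:
        if rng.random() < daily_remission:
            return TREATMENTS[(day // days_per_treatment) % len(TREATMENTS)]
        day += 1


rng = random.Random(2)
n_people = 10_000
credit = Counter(credited_treatment(rng) for _ in range(n_people))
for name, count in credit.most_common():
    print(f"{name} credited by {count} of {n_people} people")
```

In this model every single person ends up with a treatment to credit, because improvement was going to arrive eventually and some treatment was always under way when it did.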

There are also psychological factors in play. When people try an unconventional treatment, perhaps out of desperation or just the hope for relief, they may feel vulnerable to criticism or a bit defensive for trying something unorthodox and even a bit bizarre. There is therefore a huge incentive to justify their decision by concluding that the treatment worked – to show all the skeptics that they were right all along.

Then, mixed in with all of this, is a genuine improvement in mood, and therefore symptoms, from the positive attention of the practitioner (if there is one – i.e. you’re not taking an over-the-counter remedy), or just from the hope that relief is on the way and the feeling that you are doing something about your health and your symptoms. This is a genuine, but non-specific, psychological effect of receiving treatment and taking steps to have some control over your situation.

What is distressing to those of us who are trying to promote science-based medicine is that this latter factor is often treated as if it is the entire placebo effect, or at least a majority. The evidence, however, suggests that it accounts for only a small minority of the effect.

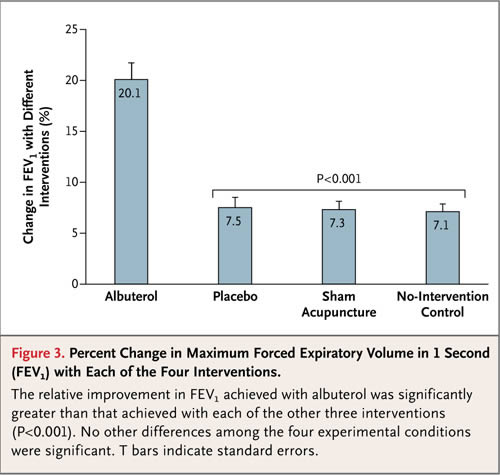

A recent asthma study, for example, shows that the placebo effect for objective measurements of asthma severity was essentially zero. There was a substantial effect for subjective outcomes. So subjects reported feeling better even when objective measures showed they were no better. This sounds an awful lot like confirmation bias and other psychological factors, like expense/risk justification and the optimism bias.

Conclusion: Stories often triumph over statistics

Placebo effects are largely an illusion of various well-known psychological factors and errors in perception, memory, and cognition – confirmation bias, regression to the mean, post-hoc fallacy, optimism bias, risk justification, suggestibility, expectation bias, and failure to account for multiple variables. There are also variable (depending on the symptoms being treated) and subjective effects from improved mood and outlook.

Concluding from all of this that a treatment “worked”, when a treatment appears to be followed by improved symptoms, is like concluding that an alleged psychic’s power “worked” whenever their random guessing hits. This is why anecdotal experience is as worthless for determining whether a treatment works as the subjective experience of the target of a cold reading is for determining whether a psychic’s power is genuine.

Yet, even for many skeptics, the latter is more intuitive than the former. It is hard to shake the sense that if someone feels better then the treatment must have “worked” in some way.

The “It worked for me” gambit will likely always be with us, however. Stories are compelling, and none more than our own. It is just how our brains work. If we eat something and get nauseated, we will avoid that food in the future. If we take a treatment and feel better, the feeling that the treatment was the cause can be profound and hard to dismiss with dry data.

This is true even with treatments that have been proven effective. We cannot know in any individual case whether or not an intervention worked, because we cannot know what would have happened without the treatment. We can only make statistical statements based on clinical data.

Thinking statistically rather than anecdotally is not in the human comfort zone, however.

The “It Worked for Me” Gambit