Alternative medicine relies heavily on anecdotal evidence and personal experience, but it knows it won’t convince mainstream science unless it can provide scientific validation. It is quick to crow about positive results from scientific studies, often from studies in laboratory mice or Petri dishes, or from human studies that are poorly designed and may not even use a control group. Science-based medicine relies on evidence from clinical trials, especially those that are randomized, double-blind, and placebo-controlled. But that evidence can be misleading. The results of even the best studies are more likely to be wrong than right. Positive results from preliminary trials are all too often overturned by negative results from subsequent larger, better trials. Individual studies are often wrong, but that doesn’t mean we should ignore them. Science is a collaborative, self-correcting enterprise that can be relied on to come ever closer to reality over the course of time. A good rule of thumb is never to believe a single study, but to wait for replication, confirmation, and a consensus of all the available data. And to consider prior probability.

In my free YouTube video lecture course on science-based medicine, I talk about “Pitfalls in Research” in lecture 9. I have been asked to recycle the information in the course guide [PDF] for that lecture in the form of an SBM post because it provides valuable guidance for evaluating scientific studies.

Ioannidis showed that most research findings are false, especially in the case of:

- Small studies

- Small effect size

- Multiple endpoints

- Financial interests and bias

- Hot topic with more teams in competition

- Research on improbable areas of CAM
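To see why small studies and improbable hypotheses do so badly, here is a minimal sketch of the basic formula from Ioannidis's 2005 paper, ignoring bias: PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that the hypothesis is true, 1 − β is the study's power, and α is the significance threshold. The function name and the three scenarios are illustrative assumptions, not figures from any real study.

```python
def research_ppv(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Probability that a statistically significant finding is actually true.

    Implements PPV = (1 - beta) * R / (R - beta * R + alpha), with no bias term.
    prior_odds is R (pre-study odds that the relationship is real),
    power is 1 - beta, alpha is the significance threshold.
    """
    beta = 1.0 - power
    return (power * prior_odds) / (prior_odds - beta * prior_odds + alpha)


# Hypothetical scenarios; the numbers are made up for illustration only.
scenarios = {
    "large trial, plausible hypothesis": research_ppv(prior_odds=1.0, power=0.80),
    "small trial, plausible hypothesis": research_ppv(prior_odds=1.0, power=0.20),
    "small trial, improbable hypothesis": research_ppv(prior_odds=0.01, power=0.20),
}

for label, ppv in scenarios.items():
    print(f"{label}: chance a positive finding is true = {ppv:.2f}")
```

Under these assumed numbers, a positive result from an underpowered trial of an improbable treatment is far more likely to be false than true, even before bias, multiple endpoints, or financial interests are taken into account.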

| Things to Keep in Mind: | Because: |
| --- | --- |
| Media reports can’t be trusted | Reporters often don’t understand the science and merely regurgitate press releases |
| Learn what can go wrong | The same flaws keep repeating |
| Never believe one study | All data pro and con must be considered |
| Prior probability matters | Basic science can’t be ignored |

Bausell’s quick test

In Snake Oil Science, Bausell provided this quick 4-point checklist:

- Is the study randomized with a credible control group?

- Are there at least 50 subjects per group?

- Is the dropout rate 25% or less?

- Was it published in a high-quality, prestigious, peer-reviewed journal?

(You can look up the journal’s impact factor)

If the answers to these questions are all Yes, that doesn’t guarantee that the study’s conclusions are credible, but if some of the answers are No, it makes the results more questionable.
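The checklist is simple enough to write down as a small function. This is only a sketch: the `StudySummary` record and its field names are hypothetical, and whether a journal counts as "high-quality" is left to the reader's own judgment.

```python
from dataclasses import dataclass


@dataclass
class StudySummary:
    # Hypothetical record of what a reader can glean from a paper.
    randomized_with_credible_control: bool
    smallest_group_size: int
    dropout_rate: float          # fraction between 0 and 1
    high_quality_journal: bool   # reader's judgment, e.g. after checking impact factor


def bausell_failures(study: StudySummary) -> list[str]:
    """Return the checklist items the study fails (empty list means all Yes)."""
    failures = []
    if not study.randomized_with_credible_control:
        failures.append("not randomized with a credible control group")
    if study.smallest_group_size < 50:
        failures.append("fewer than 50 subjects per group")
    if study.dropout_rate > 0.25:
        failures.append("dropout rate above 25%")
    if not study.high_quality_journal:
        failures.append("not published in a high-quality peer-reviewed journal")
    return failures


# Made-up example: a small randomized trial with heavy dropout.
example = StudySummary(
    randomized_with_credible_control=True,
    smallest_group_size=22,
    dropout_rate=0.40,
    high_quality_journal=True,
)
for problem in bausell_failures(example):
    print("Questionable:", problem)
```

An empty list does not make the conclusions credible; it only means the study has cleared the first hurdle.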

Other questions to ask

| Question | Why it matters |
| --- | --- |
| Was the study done on people or fruit flies? | Studies in animals and in vitro may not apply to humans. |
| Who are the subjects? | Are they representative? Could they be biased? |
| Who funded the study? | Could be a source of bias. |
| Could the authors have been biased? | Look for conflicts of interest. |
| Was randomization adequate? | Were the groups really comparable? |
| Was blinding adequate? | Was there an exit poll to see if they could guess whether they were in the test group or the control group? |
| Were there multiple endpoints? (This, and the next point, are known as “p-hacking”) | If so, were appropriate statistical corrections done? |
| Was there inappropriate data mining? | Sometimes the study is negative but the data are tortured until they seem to confess something positive. |
| Where was the study done? | Studies done in China are suspect because they almost never report negative results. |
| Were the results clinically meaningful? | Statistical significance ≠ clinical significance. |
| Did the results merit the conclusions? | Sometimes they don’t. |
| Were they studying something real? | Tooth Fairy science tries to study imaginary things like Tooth Fairies, acupoints, and human energy fields. |
| Was it a “pragmatic” real-world study? | Pragmatic studies are not meant to establish efficacy. An unproven treatment with strong placebo effects may appear to outperform a proven treatment. |
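The multiple-endpoints question is easy to put numbers on. The sketch below assumes the endpoints are independent and that the treatment does nothing at all; it computes the chance that at least one endpoint comes up "significant" by luck, along with the Bonferroni-corrected threshold (one common correction, not necessarily the one a given study should use). The function names are illustrative.

```python
def familywise_error_rate(num_endpoints: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive among independent endpoints."""
    return 1.0 - (1.0 - alpha) ** num_endpoints


def bonferroni_threshold(num_endpoints: int, alpha: float = 0.05) -> float:
    """Per-endpoint p-value cutoff that keeps the overall error rate near alpha."""
    return alpha / num_endpoints


for k in (1, 5, 20):
    print(
        f"{k:2d} endpoints: "
        f"chance of a spurious 'hit' = {familywise_error_rate(k):.0%}, "
        f"corrected cutoff = {bonferroni_threshold(k):.4f}"
    )
```

With 20 endpoints and no correction, a useless treatment has roughly a 64% chance of producing at least one "positive" result under these assumptions.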

Other things that might go wrong

- Errors of math

- Using the wrong statistical test for the kind of data collected

- Contaminants

- Poor compliance

- Conscious or unconscious manipulation of data by technician

- Procedures not carried out properly

- Deliberate fraud

Important definitions to understand

| Term | What it means |
| --- | --- |
| Statistically significant | Significant at p ≤ 0.05 ≠ Truth. The p-value just measures the chance of getting results at least this extreme if the null hypothesis is true (no difference between groups). |
| Clinically significant | Changes disease outcome |
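A quick way to see the gap between the two kinds of significance is to hold a trivial effect fixed and vary only the sample size. The sketch below uses a simple two-sample z-test with a known standard deviation; the blood-pressure numbers are invented for illustration and the function name is hypothetical.

```python
import math


def two_sided_p_value(mean_diff: float, sd: float, n_per_group: int) -> float:
    """Two-sided p-value for a difference in means (z-test, equal group sizes)."""
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = mean_diff / standard_error
    # For a standard normal, P(|Z| >= |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))


# Invented example: a drug lowers blood pressure by 0.5 mmHg (sd = 10 mmHg),
# a difference too small to matter to any patient.
for n in (100, 10_000):
    p = two_sided_p_value(mean_diff=0.5, sd=10.0, n_per_group=n)
    print(f"n = {n:6d} per group: p = {p:.4f}")
```

The same clinically meaningless 0.5 mmHg difference is nowhere near significant with 100 subjects per group and comfortably below 0.05 with 10,000 per group.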

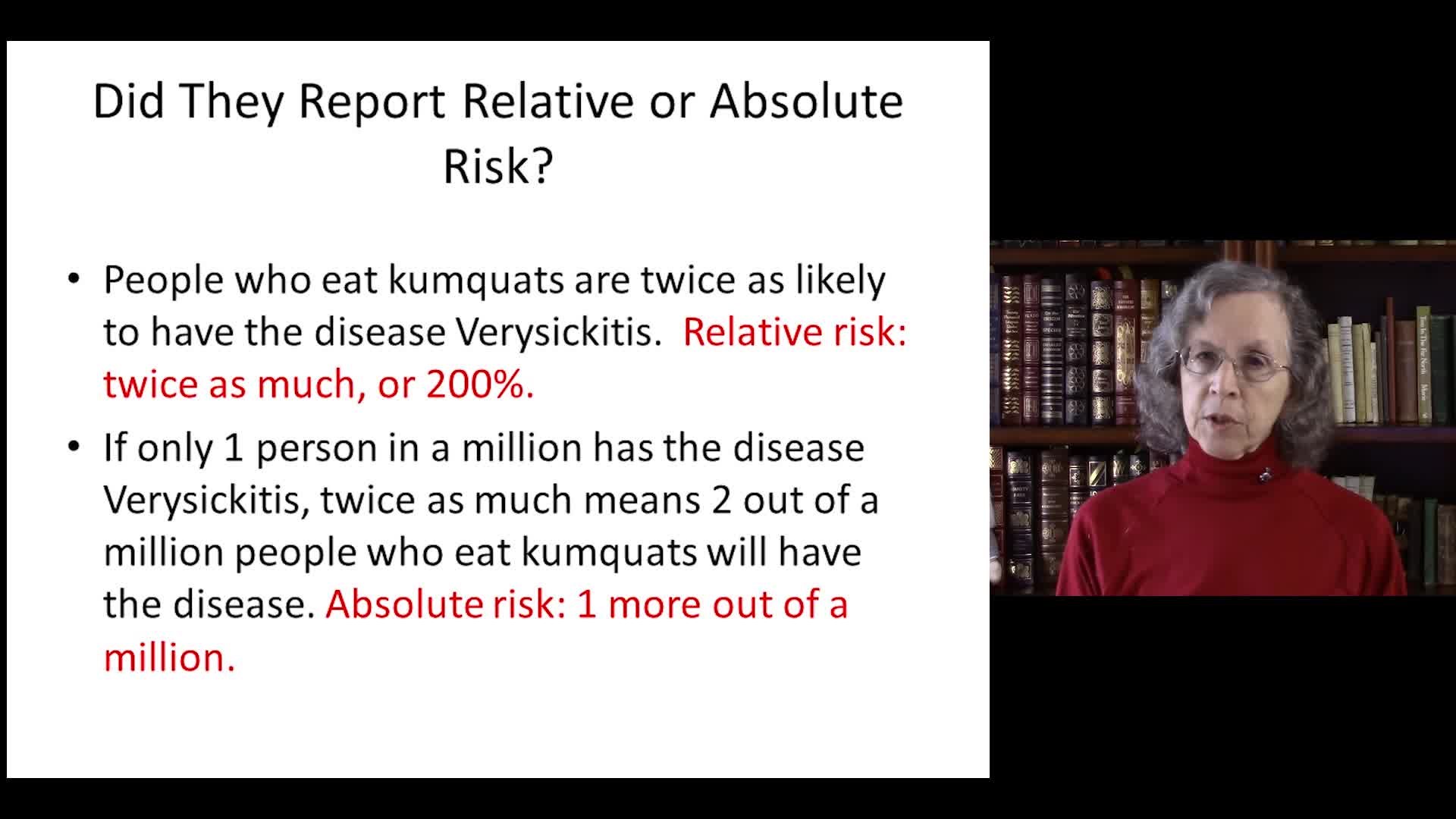

| Term | What it means |
| --- | --- |
| Relative risk | Reports percentages |
| Absolute risk | Reports actual numbers of people |
| Number Needed to Treat (NNT) | Number of people who must be treated for one person to benefit. |
| Number Needed to Harm (NNH) | Number of people who must be treated for one person to be harmed. |
| Confidence intervals | We can be confident the true value is somewhere in this range. |
| Correlation ≠ causation | Correlation = a statistical relationship between 2 variables. Doesn’t mean one causes the other. |
| Sensitivity of a test | Percentage of people with the disease who test positive. |
| Specificity of a test | Percentage of people who don’t have the disease who test negative. |
| Base rate | The prevalence in the population. The rarer the condition, the larger the share of positive test results that are false positives. |
| Positive Predictive Value (PPV) | The likelihood that a positive test is true: that you actually have the disease. |
| Negative Predictive Value (NPV) | The likelihood that a negative test is true: that you don’t have the disease. |
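Several of these definitions are easier to grasp with numbers attached. The sketch below works through two made-up cases: a treatment that halves a 2% risk (relative vs. absolute risk and NNT), and a test with 99% sensitivity and 95% specificity applied to conditions with different base rates (PPV and NPV). All function names and figures are illustrative assumptions.

```python
def risk_summary(control_event_rate: float, treated_event_rate: float) -> dict:
    """Relative risk reduction, absolute risk reduction, and NNT.

    Assumes the treatment lowers risk (control rate > treated rate).
    """
    absolute_reduction = control_event_rate - treated_event_rate
    if absolute_reduction <= 0:
        raise ValueError("treated rate must be lower than control rate")
    return {
        "relative_risk_reduction": absolute_reduction / control_event_rate,
        "absolute_risk_reduction": absolute_reduction,
        "number_needed_to_treat": 1.0 / absolute_reduction,
    }


def predictive_values(sensitivity: float, specificity: float, base_rate: float):
    """Return (PPV, NPV) for a test applied to a population with this base rate."""
    true_pos = sensitivity * base_rate
    false_neg = (1.0 - sensitivity) * base_rate
    true_neg = specificity * (1.0 - base_rate)
    false_pos = (1.0 - specificity) * (1.0 - base_rate)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Made-up trial: events in 2% of controls and 1% of treated patients.
summary = risk_summary(control_event_rate=0.02, treated_event_rate=0.01)
print(f"Relative risk reduction: {summary['relative_risk_reduction']:.0%}")
print(f"Absolute risk reduction: {summary['absolute_risk_reduction']:.1%}")
print(f"Number needed to treat:  {summary['number_needed_to_treat']:.0f}")

# Made-up test: 99% sensitive, 95% specific, at two different base rates.
for base_rate in (0.001, 0.20):
    ppv, npv = predictive_values(0.99, 0.95, base_rate)
    print(f"Base rate {base_rate:.1%}: PPV = {ppv:.1%}, NPV = {npv:.2%}")
```

In the first case, "cuts the risk in half" and "helps 1 person in 100" describe the same result. In the second, the same test gives a PPV of about 2% when the condition affects 1 in 1,000 people and about 83% when it affects 1 in 5.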

Problems that need correcting

- Poor quality studies

- Publish or perish

- Publication bias

- Lack of replication

- Mistakes missed by peer review

- Pay-to-publish journals

- Big Pharma malfeasance

Solutions

- Better education of researchers

- Quality control at journals

- Publish replications and negative studies

- Register all studies

- Full disclosure

- Improve media reporting

Science is far from perfect

Drummond Rennie said, “There seems to be no study too fragmented, no hypothesis too trivial, no literature citation too biased or too egotistical, no design too warped, no methodology too bungled, no presentation of results too inaccurate, too obscure, and too contradictory, no analysis too self-serving, no argument too circular, no conclusions too trifling or too unjustified, and no grammar and syntax too offensive for a paper to end up in print.”

A warning

This warning statement should be attached to all research studies:

“Warning! Taking any action on the basis of this research could result in injury or death. The results described in this study have not been replicated and the long-term effects of this treatment are unknown. Past performance is no guarantee of future results. When subjected to further investigation, most published research findings turn out to be false.”

Two more things to remember

- My SkepDoc’s Rule: Never accept a claim until you understand who disagrees with it and why.

- Don’t ever forget to ask if you yourself might be biased.