Back in January I wrote about the COVID Anti-Vaxxer Playbook, the three key messages that antivaccine groups have coalesced around to destroy confidence in the COVID-19 vaccine. Anti-vaccine messaging on social media had exploded since the pandemic began, creating business opportunities not just for anti-vaxxers, but also for the major social media companies (YouTube, Facebook, Instagram, and Twitter) who profit from the activity, traffic, and sharing.

In February, Steven Novella predicted the situation that is now emerging around the world: a race between getting vaccines into arms and the rapid spread of new variants of SARS-CoV-2 that are more infectious and potentially more deadly. While the vaccine rollout in the USA has accelerated, we are nowhere near herd immunity, even though the country will soon have the vaccine available to anyone who wants it. Whether those who are currently vaccine hesitant decide to take the vaccine may be an important factor in reaching herd immunity, which may require as much as 85-90% of the population to be immune (vaccinated, or infected and survived). While vaccine hesitancy appears to be dropping, as of this week approximately 1 in 4 Americans say they will not take the vaccine if offered it.

A new report found that just twelve anti-vaxxers are responsible for almost two-thirds of all anti-vaccine content on Facebook and Twitter, and the platforms continue to do as little as possible to remove it. Removing this content and its creators from these platforms may help reduce (or at least stop the growth of) existing anti-vaccine sentiment.

Fostering vaccine hesitancy – A deliberate strategy

The Gallup poll reporting on vaccine hesitancy asked reluctant respondents for their reasons:

Asked for the main reason behind their reluctance to be immunized, those who are unwilling to receive a COVID-19 vaccine are most likely to say they want to wait and confirm it is safe (23%) or do not think the health effects from the disease would be serious if they contracted it (20%). Additionally, 16% are concerned about the speed with which the vaccine was developed, 15% do not trust vaccines in general, 10% say they already have COVID-19 antibodies due to having the disease, 9% are concerned about having an allergic reaction to the shot and 7% prefer to wait and see how effective it is in preventing the disease.

This seems consistent with the unified anti-vaccine messaging that major anti-vaccine groups and voices have been pushing, which I described back in January:

- COVID-19 is not dangerous: There are few deaths, and death reports are exaggerated. “It’s just the flu.” “It will just go away on its own.”

- COVID-19 vaccines are dangerous: “The vaccines were rushed.” “The vaccines are killing people.” “Natural” immunity is better. “Vaccines are toxic.”

- Vaccine advocates cannot be trusted: “It’s politics over health.” “It’s Big Pharma profits over health.”

With approximately 25% of Americans unwilling to accept the vaccine, and the variants surging, a massive vaccine education effort is underway. And while it’s good to see that confidence in the vaccine program itself is growing, more steps need to be taken to counter the misinformation campaign that is in full swing. That’s where this new campaign to hold social media accountable comes from.

The “Disinformation Dozen”

The Center for Countering Digital Hate (CCDH), which published the Anti-Vax Playbook, recently released a new report that analyzes anti-vaccine messaging on social media. It identified 12 individuals, whom it calls the "Disinformation Dozen", who by its calculation are responsible for up to 65% of the anti-vaccine content on Facebook and Twitter:

- Joseph Mercola

- Robert F. Kennedy, Jr.

- Ty and Charlene Bollinger

- Sherri Tenpenny

- Rizza Islam

- Rashid Buttar

- Erin Elizabeth

- Sayer Ji

- Kelly Brogan

- Christiane Northrup

- Ben Tapper

- Kevin Jenkins

The twelve were identified by examining anti-vaccine content and tracing it to its sources. Each individual had a large following, produced a high volume of anti-vaccine content, or had a rapidly growing follower count. Over 812,000 posts were extracted from Facebook and Twitter in February and early March, and 65% of that content was attributable to these twelve individuals. Importantly and frustratingly, Facebook doesn't address the source of the content and stop it there. If 12,000 people share a Mercola anti-vaccine article, Facebook treats this as 12,000 individual shares of anti-vaccine messaging, each attributed to the person who shared it, rather than 12,000 shares of a single post attributed to Mercola.

Are the platforms acting?

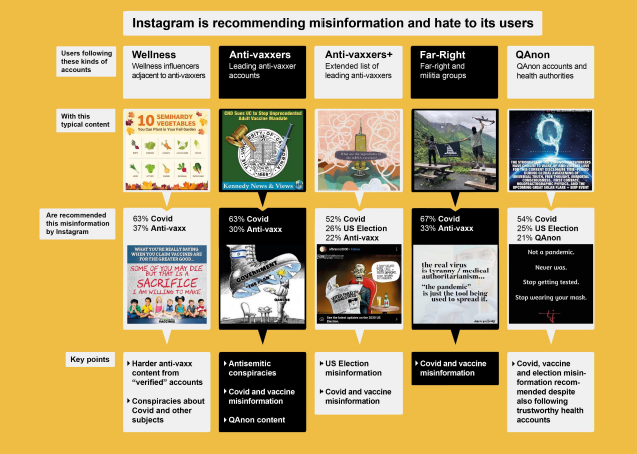

CCDH notes that the algorithms that currently serve up suggested posts are spreading vaccine misinformation. In particular, CCDH audited Instagram and observed that followers of leading anti-vaxxers were automatically served more anti-vaccine content, focusing on COVID-19 misinformation and conspiracy theories, in addition to antisemitic content and posts about QAnon. Following "wellness influencers" with links to the anti-vaccine movement also leads to suggested posts containing increasing amounts of anti-vaccine and COVID-19 misinformation. Even users who follow a mix of trusted health authorities and anti-vaccine pages will eventually be recommended anti-vaccine content.

CCDH made the following recommendations, which have yet to be acted on by Facebook/Instagram:

- do not allow those who spread anti-vaccine messages to gain "verified" status

- exclude posts about COVID-19 or vaccines from recommendations

- blacklist accounts that spread misinformation

- limit the number of suggested posts that a user is exposed to

The impact of algorithms featured in this week's grilling of tech CEOs by the House Energy and Commerce subcommittees, where questions about COVID-19 misinformation were raised. In a pointed exchange, Congressman Mike Doyle challenged the CEOs to deplatform CCDH's Disinformation Dozen.

The CBC television show Marketplace recently partnered with CCDH in an investigation of the social media giants. In February, they reported more than 800 posts on Facebook, Instagram, YouTube and Twitter to the platforms. These posts had a combined 1.5 million likes and 120,000 comments. Not coincidentally, the posts centred on the three key anti-vaccine messages. Only 12% of the reported posts were removed, but that figure jumped to 53% after Marketplace journalists identified themselves directly to the platforms. Twitter and YouTube took the least action on posts with anti-vaccine content.

On a more positive note, the platforms have recently removed some of the Disinformation Dozen's accounts. Notably, Robert F. Kennedy Jr.'s Instagram account (almost a million followers) has been deleted, but he still maintains a Facebook page for himself and for his organization, "Children's Health Defense". Rashid Buttar (#6 on the list) has also had his public Facebook page and Instagram page deleted. But no one has lost all of their platforms, and all twelve still have access to at least one major social media platform to spread disinformation.

Deplatforming and removing misinformation

It takes only a short exposure to anti-vaccine messaging to create hesitancy. During this pandemic, social media platforms have failed to remove the misinformation and anti-vaccine messaging that can create this hesitancy. CCDH's work has demonstrated that a large share of this content can be attributed to a small number of individuals. Until these platforms take stronger and more effective measures, starting perhaps with removing the "Disinformation Dozen" and changing their algorithms to reduce the spread of this content, social media will continue to undermine efforts to counter anti-vaccine sentiment and increase COVID-19 vaccine uptake.