“Early detection of cancer saves lives.”

How many times have you heard this statement or something resembling it? It’s a common assumption (indeed, a seemingly common sense assumption) that detecting cancer early is always a good thing. Why wouldn’t it always be a good thing, after all? For many cancers, such as breast cancer and colon cancer, there’s little doubt that early detection at the very least makes the job of treating the cancer easier. Also, the cancer is detected at an earlier stage almost by definition. But does earlier detection save lives? The answer, as you might expect, depends upon the tumor, its biology, and the quality and cost of the screening modality used to detect the cancer. Indeed, it turns out that the question of whether early detection saves lives is a much more complicated question to answer than you probably think, a question that even many doctors have trouble with. It’s also a question that can be argued too far in the other direction. In other words, in the same way that boosters of early detection of various cancers may sometimes oversell the benefits of early detection, there is a contingent that takes a somewhat nihilistic view of the value of screening and argues that it doesn’t save lives.

A corollary of the latter point is that some boosters of so-called “alternative” medicine take the complexity of evaluating the effect of early screening on cancer mortality and the known trend towards diagnosing earlier and earlier stage tumors as saying that our treatments for cancer are mostly worthless and that the only reason we are apparently doing better against cancer is because of early diagnosis of lesions that would never progress. Here is a typical such comment from a frequent commenter whose hyperbolic style will likely be immediately recognizable to regular readers here:

Most cancer goes away, or never progresses, even with NO medical treatment. Most people who get cancer never know it. At least in the past, before early diagnosis they never knew it.

Now many people are diagnosed and treated, and they never get sick or die from cancer. But this would have also been the case if they were never diagnosed or treated.

Maybe early diagnosis and treatment do save the lives of a small percentage of all who are treated. Maybe not. We don’t know.

As is so often the case with such simplistic black and white statements, there is a grain of truth buried under the absolutist statement, but it’s buried so deep that it’s well-nigh unrecognizable. Because we see this sort of statement frequently, I thought it would be worthwhile to discuss some of the issues that make the reduction of mortality from cancer so difficult to achieve through screening. I will do this in two parts, although the next part may not necessarily appear next week.

Shortly after I learned that Elizabeth Edwards’ breast cancer had recurred in her bones last spring, meaning that her cancer is now stage IV and incurable, I read for our journal club a rather old article. However, this old article still has a lot of resonance today; indeed it was eerily prescient given the technological leaps that have driven the development of ever more sensitive imaging instruments and other diagnostic tests that have occurred over the last 15 years. The article, written by William C. Black and H. Gilbert Welch and entitled Advances in Diagnostic Imaging and Overestimations of Disease Prevalence and the Benefits of Therapy, appeared in the New England Journal of Medicine in 1993, but could easily have been written today. All you’d have to do is to substitute some of the imaging modalities mentioned in the article, and it would be just as valid now, if not more so. Until someone writes a better one, this article should be required reading for all physicians and medical students.

The article begins by setting the stage with the essential conflict, which is that increasing sensitivity leads to our detecting abnormalities that may never progress to disease:

Over the past two decades a vast new armamentarium of diagnostic techniques has revolutionized the practice of medicine. The entire human body can now be imaged in exquisite anatomical detail. Computed tomography (CT), magnetic resonance imaging (MRI), and ultrasonography routinely “section” patients into slices less than a centimeter thick. Abnormalities can be detected well before they produce any clinical signs or symptoms. Undoubtedly, these technological advances have enhanced the physician’s potential for understanding disease and treating patients.

Unfortunately, these technological advances also create confusion that may ultimately be harmful to patients. Consider the case of prostate cancer. Although the prevalence of clinically apparent prostate cancer in men 60 to 70 years of age is only about 1 percent, over 40 percent of men in their 60s with normal rectal examinations have been found to have histologic evidence of the disease. Consequently, because the prostate is studied increasingly by transrectal ultrasonography and MRI, which can detect tumors too small to palpate, the reported prevalence of prostate cancer increases. In addition, the increased detection afforded by imaging can confuse the evaluation of therapeutic effectiveness. As the spectrum of detected prostate cancer becomes broader with the addition of tumors too small to palpate, the reported survival from the time of diagnosis improves regardless of the actual effect of the new tests and treatments.

In this article, we explain how advances in diagnostic imaging create confusion in two crucial areas of medical decision making: establishing how much disease there is and defining how well treatment works. Although others have described these effects in the narrow context of mass screening [6,7] and in a few clinical situations, such as the staging of lung cancer, these consequences of modern imaging increasingly pervade everyday medicine. Besides describing the misperceptions of disease prevalence and therapeutic effectiveness, we explain how the increasing use of sophisticated diagnostic imaging promotes a cycle of increasing intervention that often confers little or no benefit. Finally, we offer suggestions that may minimize these problems.

Since 1993, CT and MRI scans have become so powerful that they now routinely “section” people into “slices” much thinner than 1 cm, making currently achievable imaging sensitivity considerably higher than it was 14 years ago. The essential conflict, at least in the case of cancer, is that far more people develop malignant changes in various organs as they get older than ever develop clinically apparent cancer. Prostate cancer is perhaps the best example of this phenomenon. If you look at autopsy series of men who died at an age greater than 80, the vast majority (60-80%) of them will have detectable microscopic areas of prostate cancer if their prostates are examined closely enough. Yet, obviously, prostate cancer didn’t kill them. After all, they all lived to a ripe old age and died either of old age or a cause other than prostate cancer. In other words, they died with early stage cancer but not of cancer.

Now imagine, if you will, that a test were invented that was 100% sensitive and specific for detecting prostate cancer cells and that, moreover, could detect microscopic foci of prostate cancer less than 1 mm in diameter. Now imagine applying this test to every 60 year old man. Somewhere around 40% of them will register a positive result, even though only around 1/40 of those apparent positives (the roughly 1% of men with clinically apparent disease out of the 40% with histologic disease) would actually have disease that needs any treatment. Yet, they would all get biopsies. Many of them would get radiation and/or surgery simply because we can’t take the chance, or because, in our medical-legal climate, watchful waiting (observing a potential cancer to see whether it grows fast enough to become clinically apparent) is a very hard sell, even when it’s the correct approach. After all, we don’t know which of them has disease that actually will threaten their lives. It may well be that expression profiling (a.k.a. gene chip) testing, something that did not exist in 1993, will eventually allow us to sort this question out, but in the meantime we have no way of doing so. Even so, I note that there is nonetheless an increasing trend towards “watchful waiting” rather than aggressive surgery or radiation therapy in many cases of prostate cancer with less aggressive histology.
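The 1/40 figure is simply the ratio of the two prevalences quoted from the article. Here is a minimal sketch of that arithmetic. It assumes, as a simplification, that every clinically apparent case is among the cases the hypothetical perfect test would flag; the function name is made up for illustration.

```python
def max_fraction_needing_treatment(clinical_prevalence, detected_prevalence):
    """Upper bound on the share of test-positive people with clinically apparent disease.

    Assumes (for illustration only) that every clinically apparent case is
    also among the cases the more sensitive test detects.
    """
    if not 0 < detected_prevalence <= 1:
        raise ValueError("detected_prevalence must be in (0, 1]")
    if not 0 <= clinical_prevalence <= detected_prevalence:
        raise ValueError("clinical_prevalence must be in [0, detected_prevalence]")
    return clinical_prevalence / detected_prevalence


# Figures quoted in the article for men in their 60s:
# about 1% clinically apparent, about 40% with histologic evidence.
fraction = max_fraction_needing_treatment(0.01, 0.40)
print(f"at most {fraction:.3f} of positives, about 1 in {round(1 / fraction)}")
```

The remaining 39 out of every 40 positives are the men who would face biopsies, and possibly surgery or radiation, for disease that would never have become clinically apparent.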

Of the most common diseases, the various forms of cancer are probably the ones most likely to be overdiagnosed as our detection abilities improve, whether through increasingly detailed imaging tests or through blood tests, both of which are becoming ever more sensitive. Breast cancer is the other big example besides prostate cancer, but I plan on holding off on that one until Part 2 of this series. So instead I’ll look at another example from the article, namely thyroid cancer. Thyroid cancer is fairly uncommon (although certainly not rare) among cancers, with a prevalence of around 0.1% for clinically apparent cancer in adults between ages 50 and 70. Finnish investigators performed an autopsy study in which they sliced the thyroids at 2.5 mm intervals and found at least one papillary thyroid cancer in 36% of Finnish adults. Doing some calculations, they estimated that, if they were to decrease the width of the “slices,” at a certain point they could “find” papillary cancer in nearly 100% of people between 50-70. This is not such an issue in thyroid cancer, which is uncommon enough that mass screening other than routine physical examination to detect masses is impractical, but for more common tumors it becomes a big consideration, which is why I will turn to breast cancer in the next post.

The bottom line is that the ever-earlier detection of many diseases, particularly cancer, is not necessarily an unalloyed good. As the detection threshold moves ever earlier in the course of a disease or abnormality (in the case of cancer, to ever smaller tumors all the way down to the level of clusters of cells), the apparent prevalence of the disease being screened for increases, and abnormalities that may never turn into the disease start to be detected at an increasing frequency. In other words, the signal-to-noise ratio falls precipitously. This has consequences. It leads, at the very minimum, to more testing and may lead us to treating abnormalities that may never result in disease that affects the patient, which at the very minimum leads to patient anxiety and at the very worst leads to treatments that put the patient at risk of complications and do the patient no good.

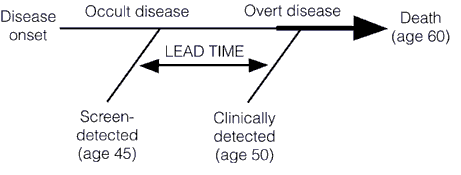

This earlier detection can also lead to an overestimation of the efficacy of treatment. That’s the grain of truth in the comment above. The reasons for this are two types of bias in treatment studies known as lead time bias and length bias. In the case of cancer, survival is measured from the time of diagnosis. Consequently, if the tumor is diagnosed at an earlier time in its course through the use of a new advanced screening detection test, the patient’s survival will appear to be longer, even if earlier detection has no real effect on the overall length of survival, as illustrated below:

Unless the rate of progression from the point of a screen-detected abnormality to a clinically detected abnormality is known, it is very difficult to figure out whether a treatment of the screen-detected tumor is actually improving survival when compared to tumors detected later. To do so, the lead time needs to be known and subtracted from the group with the test-based diagnoses. The problem is that the use of the more sensitive detection tests usually precedes such knowledge of the true lead time by several years. The adjustment for lead time assumes that the screening test-detected tumors will progress at the same rate as those detected later clinically. However, the lead time is usually stochastic. It will be different for different patients, with some progressing rapidly and some progressing slowly. This variability is responsible for a second type of bias, known as length bias.
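To make the lead time arithmetic concrete, here is a small sketch with one hypothetical patient. All of the ages are invented, and the key assumption is that earlier diagnosis does nothing to change the age at death. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Hypothetical natural history; all ages are in years and made up."""
    age_screen_detectable: float      # tumor first visible to the sensitive test
    age_clinically_detectable: float  # tumor would be found by symptoms or exam
    age_at_death: float               # assumed unchanged by earlier diagnosis


def survival_from_diagnosis(p: Patient, screened: bool) -> float:
    """Survival as usually reported: time from diagnosis to death."""
    if screened:
        age_at_diagnosis = p.age_screen_detectable
    else:
        age_at_diagnosis = p.age_clinically_detectable
    return p.age_at_death - age_at_diagnosis


def lead_time(p: Patient) -> float:
    """How much earlier the screening test moves the date of diagnosis."""
    return p.age_clinically_detectable - p.age_screen_detectable


patient = Patient(age_screen_detectable=60.0,
                  age_clinically_detectable=64.0,
                  age_at_death=68.0)

without_screening = survival_from_diagnosis(patient, screened=False)
with_screening = survival_from_diagnosis(patient, screened=True)
adjusted = with_screening - lead_time(patient)

print(f"survival after clinical diagnosis: {without_screening:.1f} years")
print(f"survival after screen diagnosis:   {with_screening:.1f} years")
print(f"screen survival minus lead time:   {adjusted:.1f} years")
```

In this toy case the screened patient appears to survive twice as long and would count as a five-year survivor, yet dies at exactly the same age. Subtracting the lead time removes the apparent gain, but only because the lead time is known here by construction.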

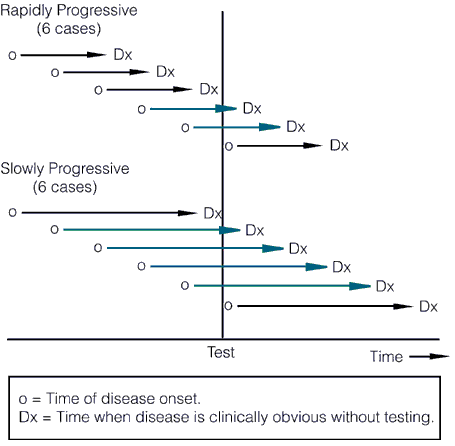

Length bias refers to comparisons that are not adjusted for rate of progression of the disease. The probability of detecting a cancer before it becomes clinically detectable is directly proportional to the length of its preclinical phase, which is inversely proportional to its rate of progression. In other words, slower-progressing tumors have a longer preclinical phase and a better chance of being detected by a screening test before reaching clinical detectability, leading to the disproportionate identification of slowly progressing tumors by screening with newer, more sensitive tests. This concept is illustrated below:

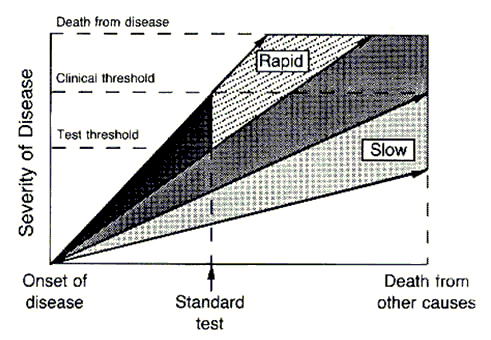

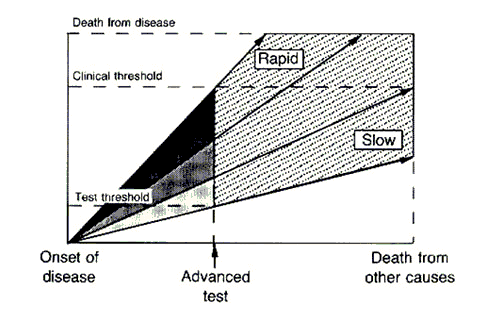

The length of the arrows above represents the length of the detectable preclinical phase, from the time of detectability by the test to clinical detectability. Of six cases of rapidly progressive disease, testing at any single point in time in this hypothetical example would only detect 2/6 tumors, whereas in the case of the slowly progressive tumors 4/6 would be detected. Worse, the effect of length bias increases as the detection threshold of the test is lowered and disease spectrum is broadened to include the cases that are progressing the most slowly, as shown below:

The top image represents an idealized example of disease developing in a cohort of patients as detected by two different hypothetical tests, the first being the less sensitive standard test and the second being the “advanced” test, which has a lower threshold of detection. The cases detected by the more sensitive advanced test are represented in the stippled area. The standard test detects only the cases that are rapidly progressive. However, the new test detects all cases, including the ones that are slowly progressive and, if left alone, would not have killed the patient, who would have died from other causes before the tumor became clinically detectable by the “standard” test. If subjected to the more sensitive test, these latter two patients would be at risk for medical or surgical interventions that would not prolong their lives and that carry the risk of morbidity or even mortality. This is one reason why “screening CT scans” are usually not a good idea.
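Length bias can be reproduced with a toy calculation. The sketch below is not taken from the article: the onset times, the preclinical durations, and the screening time are all invented, chosen so that a single screen catches 2 of 6 fast tumors and 4 of 6 slow ones, in line with the hypothetical example described above.

```python
def detected_by_single_screen(onsets, preclinical_duration, screen_time):
    """Count tumors that are in their detectable preclinical phase at screen_time.

    A tumor is detectable from its onset until it becomes clinically
    apparent, preclinical_duration years later.
    """
    return sum(
        1 for onset in onsets
        if onset <= screen_time < onset + preclinical_duration
    )


# Six tumors of each kind, arising at the same (invented) times in years.
onsets = [0, 2, 4, 6, 8, 10]
FAST_PRECLINICAL_YEARS = 4   # rapidly progressive: short detectable window
SLOW_PRECLINICAL_YEARS = 8   # slowly progressive: long detectable window
SCREEN_TIME = 7              # one screening test, at year 7

fast_found = detected_by_single_screen(onsets, FAST_PRECLINICAL_YEARS, SCREEN_TIME)
slow_found = detected_by_single_screen(onsets, SLOW_PRECLINICAL_YEARS, SCREEN_TIME)

print(f"fast tumors caught by the screen: {fast_found}/6")  # 2/6
print(f"slow tumors caught by the screen: {slow_found}/6")  # 4/6
```

Both kinds of tumor arise equally often in this toy cohort, yet two thirds of the screen-detected cases are the slow ones. The screen-detected group therefore looks better than the disease as a whole before any treatment is given.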

As the authors state:

Unless one can follow a cohort over time, there is no way of accurately estimating the probability that a subclinically detected abnormality will naturally progress to an adverse outcome. The probability of such an outcome is mathematically constrained, however, by the prevalence of the detected abnormality. The upper limit of this probability can be derived from reasoning that dates to the 17th century, when vital statistics were first collected. If the number of persons dying from a specific disease is fixed, then the probability that a person with the disease will eventually die from it is inversely related to the prevalence of the disease. Therefore, given fixed mortality rates, an increase in the detection of a potentially fatal disease decreases the likelihood that the disease detected in any one person will be fatal.

In other words, early detection makes it appear that fewer people die of the disease, even if treatment has no effect on the progression of the disease. It will also make new treatments introduced after the lower detection threshold takes hold appear more effective:

Lead-time and length biases pertain not only to changes that lower the threshold for detecting disease, but also to new treatments that are applied at the same time. Whether or not new therapy is more effective than old therapy, patients given diagnoses with the use of lower detection thresholds will appear to have better outcomes than their historical controls because of these biases. Consequently, new therapies often appear promising and could even replace older therapies that are more effective or have fewer side effects. Because the decision to treat or to investigate the need for treatment further is increasingly influenced by the results of diagnostic imaging, lead-time and length biases increasingly pervade medical practice.

This month there was a study out of Norway that shows just how variable the growth rate of a tumor can be, a variability that suggests just how difficult it is to optimize a screening strategy that applies to a wide population. Indeed, this study shows that not only are cancers of different organs different diseases, but arguably different cancers in the same organ behave almost like different diseases. In brief, imaging and cancer incidence data were modeled from 395 women and the rates of breast cancer growth thereby estimated. What was found was an enormous variability in tumor doubling times. The mean time for a tumor to double from 1 cm to 2 cm in diameter was 1.7 years. However, 5% of the subjects with breast cancer had tumors whose doubling time was less than 1.2 months. Of course, the doubling of the diameter of a tumor is in actuality an eight-fold increase in tumor volume, which makes this result even more impressive. Not surprisingly, women with such rapidly growing tumors tended to be younger. On the other end of the spectrum, 5% of the women had tumors whose doubling time was greater than 6.3 years. The study also suggested that most breast cancers become detectable on imaging when they reach a diameter of between 0.5 and 1.0 cm. It’s not hard to see how, taken together, this data suggests that no screening regimen is likely to detect a cancer before it reaches 2 cm in diameter except maybe 10% of the time. The converse of this is that women with slow-growing tumors could do just as well with screening every three years. Of course, the problem is that we have no way of knowing who will fall into which category. Such are the complications that have made it difficult to demonstrate a decrease in cancer-specific mortality from mammographic screening. The evidence that it does so in women over 50 is fairly strong; less strong (equivocal, even) is the evidence supporting a decrease in mortality attributable to mammographic screening in women between 40-50.
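The eight-fold figure follows from volume scaling with the cube of the diameter. The sketch below works through that arithmetic and converts the quoted diameter doubling times into volume doubling times. It assumes a roughly spherical tumor and constant exponential growth over the interval, which is a simplification of mine and not necessarily the growth model used in the study. The function names are hypothetical.

```python
import math


def volume_fold_change(d_start_cm, d_end_cm):
    """Fold change in volume for a sphere whose diameter changes as given."""
    return (d_end_cm / d_start_cm) ** 3


def volume_doubling_time(time_for_diameter_change, d_start_cm, d_end_cm):
    """Time per volume doubling, assuming constant exponential growth."""
    doublings = math.log2(volume_fold_change(d_start_cm, d_end_cm))
    return time_for_diameter_change / doublings


print(volume_fold_change(1.0, 2.0))  # 8.0

# Times quoted above for growth from 1 cm to 2 cm in diameter, in years.
quoted_times = [
    ("fastest 5% (under 1.2 months)", 1.2 / 12),
    ("mean", 1.7),
    ("slowest 5% (over 6.3 years)", 6.3),
]
for label, years in quoted_times:
    per_doubling = volume_doubling_time(years, 1.0, 2.0)
    print(f"{label}: about {per_doubling:.2f} years per volume doubling")
```

Growing from 1 cm to 2 cm takes three volume doublings, so under these assumptions the fastest tumors double in volume within a couple of weeks while the slowest take more than two years.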

There is another complication that these more powerful imaging modalities can lead to that wasn’t discussed in the paper: stage migration. This is a phenomenon that occurs when more sophisticated imaging studies or more aggressive surgery leads to the detection of tumor spread that wouldn’t have been noted in an identical patient using previously used tests. This phenomenon is colloquially known in the cancer biz as the Will Rogers effect. The name is based on Will Rogers’ famous joke: “When the Okies left Oklahoma and moved to California, they raised the average intelligence level in both states.” This little joke describes very well what can happen in cancer. What in essence happens is that technology results in a migration of patients from one stage to another that does the same thing for cancer prognosis that Will Rogers’ famous quip did for intelligence. Consider this example. Patients who would formerly have been classified as, for example, stage II cancer (any cancer), thanks to better imaging or more aggressive surgery, have additional disease or metastases detected that wouldn’t have been detected in the past. They are now, under the new conditions and using the new test, classified as stage III, even though in the past they would have been classified as stage II. This leads to the paradoxical statistical effect of making the survival of both groups (stage II and III) appear better, without any actual change in the overall survival of the group as a whole. This paradox comes about because the patients who “migrate” to stage III tend to have a lower volume of disease or less aggressive disease compared to the average stage III patient and thus a better prognosis. Adding them to the stage III patients from before thus improves the apparent survival of stage III patients as a group.

The converse is that the patients whose additional disease previously went undetected tended to be the stage II patients who would have recurred and done more poorly compared to the average patient with stage II disease; i.e., the worst prognosis stage II patients. But now, they have “migrated” to stage III, leaving behind stage II patients who truly do not have as advanced disease and thus in general have a better prognosis. Thus, the prognosis of the stage II group also ends up appearing to be better with no real change in the overall survival from this cancer.
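The Will Rogers effect is easy to verify with made-up numbers. In the sketch below, each patient is represented by a hypothetical five-year survival probability. The group sizes and probabilities are invented purely for illustration and do not come from any study.

```python
from statistics import fmean

# Hypothetical five-year survival probabilities, one entry per patient.
true_stage_2 = [0.80] * 60    # genuinely stage II disease
occult_spread = [0.50] * 40   # spread that only the newer imaging can detect
true_stage_3 = [0.30] * 100   # stage III even by the older tests

# Old staging: the occult cases are counted as stage II.
old_stage_2 = true_stage_2 + occult_spread
old_stage_3 = true_stage_3

# New staging: the same patients "migrate" to stage III.
new_stage_2 = true_stage_2
new_stage_3 = true_stage_3 + occult_spread

everyone = true_stage_2 + occult_spread + true_stage_3

print(f"stage II:  {fmean(old_stage_2):.3f} -> {fmean(new_stage_2):.3f}")
print(f"stage III: {fmean(old_stage_3):.3f} -> {fmean(new_stage_3):.3f}")
print(f"overall:   {fmean(everyone):.3f} (unchanged by restaging)")
```

Stage II survival rises from 0.68 to 0.80 and stage III survival rises from 0.30 to about 0.36, while overall survival for all 200 patients stays at 0.49, because no individual patient’s outcome has changed.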

Does all of this mean that we’re fooling ourselves that we’re doing better in treating cancer? That, after all, is the charge being made. Not at all. It simply means that the question of sorting out “real” effects on cancer survival attributable to new treatments being tested from spurious effects due to these biases is more complicated than it at first seems. For one thing, it points to the importance of carefully matching any experimental groups in clinical trials according to stage as closely as possible using similar tests and imaging modalities to diagnose and measure the disease. These factors are yet another reason why well-controlled clinical trials, with carefully matched groups and clear-cut diagnostic criteria, are critical to practicing science-based medicine. It also means that sorting out lead time bias, length bias, and the Will Rogers effect from whether there is actually a better effect from new treatments can be a complex and messy business. If we as clinicians aren’t careful, it can lead to a cycle of increasing intervention for decreasing disease. At some point, if common sense doesn’t prevail (and in the present medical-legal situation, it’s pretty hard to argue against treating any detectable cancerous change), it can reach a point of ever diminishing returns, or even a point where the interventions cause more harm than good to patients. The authors have similarly good advice for dealing with this:

Meanwhile, clinicians can heed the following advice. First, expect the incidence and prevalence of diseases detectable by imaging to increase in the future. Some increases may be predictable on the basis of autopsy studies or other intensive cross-sectional prevalence studies in sample populations. Others may not be so predictable. All types of increases should be expected. The temptation to act aggressively must be tempered by the knowledge that the natural history of a newly detectable disease is unknown. For many diseases, the overall mortality rate has not changed, and the increased prevalence means that the prognosis for any given patient with the diagnosis has actually improved.

Second, expect that advances in imaging will be accompanied by apparent improvements in therapeutic outcomes. The effect of lead-time and length biases may be potent, and clinicians should be skeptical of reported improvements that are based on historical and other comparisons not controlled for the anatomical extent of disease and the rate of progression. Clinicians may even consider that the opposite may be true — i.e., real outcomes may have worsened because of more aggressive interventions.

Finally, consider maintaining conventional clinical thresholds for treating disease until well-controlled trials prove the benefit of doing otherwise. This will require patience. A well-designed randomized clinical trial takes time. So does accumulating enough experience on outcomes from nonexperimental methods that can be used to control for the extent of disease and the rate of progression. From the point of view of both patients and policy, it is time well spent.

These words are just as relevant today as they were 15 years ago. On the surface, they would appear to support the words of our cranky commenter from the beginning of this post, but they do not. The reason, of course, is that it is quite possible to control for lead time bias, length bias, and stage migration (the Will Rogers effect), and it’s what clinical investigators do. It’s just difficult, and careful trial design is necessary. Indeed, in carefully controlled studies for a number of cancers, the efficacy of our various interventions against cancer has been demonstrated. In addition, in science-based medicine, unlike the blandishments of “alternative” medicine, we know that there is a cost for every new intervention. The detection of ever-smaller cancers, of which we do not know and can only roughly estimate the percentage that will endanger the patient’s life, leads to increasing numbers of biopsies and treatments that subject patients to the risk of complications and overtreatment, doing some patients no good even as they may save the lives of others. Finding the “sweet spot,” where increased detection maximizes the diagnosis of treatable tumors at an early stage but minimizes the number of “unnecessary” biopsies and therapeutic interventions, is a complex business that doesn’t always give the clear-cut answers that our commenter clearly wants.

Compounding the difficulty, it is very hard to convince patients and even most physicians that just because we can detect disease at ever lower thresholds doesn’t mean that we should, and that just because we can treat cancer at ever earlier time points or ever smaller sizes doesn’t mean that we should. Moreover, the answer will not be the same for all tumors. Remember, cancer is not a single disease, but rather a collection of dozens, if not hundreds, of diseases. For some tumors (pancreatic cancer, for instance), clearly we need to do better at early detection, but for others (perhaps prostate cancer and breast cancer) spending ever more money and effort to find disease at an earlier time point will yield ever decreasing returns and may even lead to patient harm. It is likely that each individual tumor will have a different “sweet spot,” where the benefits of detection most outweigh the risks of excessive intervention. Similarly, different tumors require different clinical trial designs to rule out the effects of the various biases discussed in this post. Contrary to what our commenter says, it is not only possible to find each sweet spot in terms of early detection versus overtreatment and to sort out the effects of confounding biases; it is imperative that we do so. It’s just that doing so is far more difficult than the frequently simplistic slogans urging more early detection or attacks on “conventional” oncology as not curing anyone because “most cancers don’t progress” would suggest.

To be continued…